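Delivering Dubbo services to k8s. Dubbo is a high-performance service framework open-sourced by Alibaba. It lets applications provide and consume services over high-performance RPC, and it integrates seamlessly with the Spring framework. In this article we treat it as our production application workload and deliver it onto our PaaS.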

Delivery architecture diagram

Architecture details

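zk (ZooKeeper): Dubbo uses ZooKeeper as its service registry. We run 3 ZooKeeper nodes as one ensemble. As with etcd, the ensemble has one leader and two followers, and when the leader fails the remaining nodes elect a new one. Because ZooKeeper is stateful, it runs outside the k8s cluster, and only stateless services run inside.

Dubbo microservices: run inside the cluster and are scaled out through the graphical dashboard when a flash sale or another traffic peak arrives, then scaled back in afterwards.

git: developers push their code to a git service. Here we use Gitee; GitHub works as well.

Jenkins: pulls the code from git, compiles and packages it into an image, and pushes the image to Harbor.

OPS server (host 200): applies the images in Harbor to k8s through YAML manifests. For now we use YAML files; later, Spinnaker will provide a graphical workflow.

User (the smiley face in the diagram): external requests pass through the Ingress to the Dubbo consumer (the web service) inside the cluster.

The end goal is to do all configuration through graphical tools.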
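The three-node ensemble described above follows ZooKeeper's majority rule: it keeps serving and can elect a leader only while a strict majority (quorum) of its voting servers is up. A minimal bash sketch of that arithmetic; the function names are illustrative and not part of any ZooKeeper tool:

```shell
#!/usr/bin/env bash
# Majority (quorum) size for an ensemble of n voting ZooKeeper servers.
zk_quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# How many servers may fail while a quorum still survives.
zk_tolerated_failures() {
  local n=$1
  echo $(( (n - 1) / 2 ))
}

for n in 3 4 5; do
  echo "ensemble=$n quorum=$(zk_quorum "$n") tolerated_failures=$(zk_tolerated_failures "$n")"
done
```

With 3 servers the quorum is 2, so any one node (including the leader) can fail. A fourth server raises the quorum to 3 without tolerating an extra failure, which is why ensembles are usually sized with an odd number of nodes.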

Server role assignment

| Hostname | Role | IP |
|---|---|---|
| HDSS7-11.host.com | k8s proxy node 1, zk1 | 10.4.7.11 |
| HDSS7-12.host.com | k8s proxy node 2, zk2 | 10.4.7.12 |
| HDSS7-21.host.com | k8s compute node 1, zk3 | 10.4.7.21 |
| HDSS7-22.host.com | k8s compute node 2, Jenkins | 10.4.7.22 |
| HDSS7-200.host.com | k8s ops node (Docker registry) | 10.4.7.200 |

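ZooKeeper deployment

ZooKeeper solves data-management problems that distributed applications commonly run into, such as unified naming, state synchronization, cluster management and distributed configuration management. Put simply, ZooKeeper = file system + watch/notification mechanism.

Dubbo services register themselves in ZooKeeper and keep their configuration there; when that configuration changes, ZooKeeper notifies the subscribed clients so that they pick up the latest version.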

Java environment installation

On the 11/12/21 hosts, install a Java environment. We use JDK 8, which can be downloaded from the official site (here the tarball is copied from host 200). Create the directories before changing into them:

    [root@hdss7-21 ~]# mkdir -p /opt/src /usr/java
    [root@hdss7-21 ~]# cd /opt/src
    [root@hdss7-21 src]# scp root@hdss7-200:/opt/src/jdk-8u211-linux-x64.tar.gz .
    [root@hdss7-21 src]# tar xf jdk-8u211-linux-x64.tar.gz -C /usr/java
    [root@hdss7-21 src]# ln -s /usr/java/jdk1.8.0_211 /usr/java/jdk

Add the Java environment variables by appending the following to the end of /etc/profile:

    export JAVA_HOME=/usr/java/jdk
    export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH
    export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

Then reload the profile and verify:

    [root@hdss7-11 ~]# source /etc/profile
    [root@hdss7-11 ~]# java -version
    java version "1.8.0_211"
    Java(TM) SE Runtime Environment (build 1.8.0_211-b12)
    Java HotSpot(TM) 64-Bit Server VM (build 25.211-b12, mixed mode)

ZooKeeper installation

Download and extract ZooKeeper on each of the 11/12/21 hosts:

    [root@hdss7-12 src]# wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz
    [root@hdss7-12 src]# tar xf zookeeper-3.4.14.tar.gz -C /opt
    [root@hdss7-12 src]# cd /opt
    [root@hdss7-12 opt]# ln -s /opt/zookeeper-3.4.14 /opt/zookeeper
    [root@hdss7-12 opt]# mkdir -pv /data/zookeeper/data /data/zookeeper/logs

Create and edit the ZooKeeper configuration /opt/zookeeper/conf/zoo.cfg as follows:

    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/data/zookeeper/data
    dataLogDir=/data/zookeeper/logs
    clientPort=2181
    server.1=zk1.od.com:2888:3888
    server.2=zk2.od.com:2888:3888
    server.3=zk3.od.com:2888:3888
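tickTime is ZooKeeper's basic time unit in milliseconds, while initLimit and syncLimit are counted in ticks: initLimit bounds how long a follower may take to connect to and sync with the leader, and syncLimit how far a follower may fall behind before it is dropped. (In the server.N lines, port 2888 carries follower-to-leader traffic and 3888 is used for leader election.) A small sketch that reads those keys from zoo.cfg-style text and converts them to milliseconds; it assumes plain key=value lines with no spaces around "=", and the helper names are illustrative:

```shell
#!/usr/bin/env bash
# Print the value of a key from zoo.cfg-style text read on stdin.
cfg_get() {
  local key=$1
  awk -F= -v k="$key" '$1 == k { print $2; exit }'
}

# Convert initLimit/syncLimit (in ticks) to milliseconds.
zk_timeouts_ms() {
  local cfg=$1 tick init_ticks sync_ticks
  tick=$(cfg_get tickTime <<<"$cfg")
  init_ticks=$(cfg_get initLimit <<<"$cfg")
  sync_ticks=$(cfg_get syncLimit <<<"$cfg")
  echo "init_ms=$(( tick * init_ticks )) sync_ms=$(( tick * sync_ticks ))"
}

cfg='tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181'
zk_timeouts_ms "$cfg"   # init_ms=20000 sync_ms=10000
```

With the values above, a follower gets 20 seconds to sync at startup and is dropped if it lags by more than 10 seconds.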

On host 11, add the DNS records: edit /var/named/od.com.zone, roll the serial forward by one, and append the following at the bottom:

    zk1    A    10.4.7.11
    zk2    A    10.4.7.12
    zk3    A    10.4.7.21

Restart named and check the resolution:

    [root@hdss7-11 opt]# systemctl restart named
    [root@hdss7-11 opt]# dig -t A zk1.od.com @10.4.7.11 +short
    10.4.7.11
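Rolling the serial forward matters because secondary DNS servers only transfer a zone again when its SOA serial increases. The zone here uses a date-based YYYYMMDDNN serial (for example 2021121007). A sketch of computing the next value; the function name and its "today" argument are illustrative assumptions, passed in explicitly so the result is reproducible:

```shell
#!/usr/bin/env bash
# Next SOA serial in YYYYMMDDNN form.
#   $1 = current serial, e.g. 2021121007
#   $2 = today's date as YYYYMMDD
next_serial() {
  local current=$1 today=$2
  local first_of_today=$(( today * 100 + 1 ))   # YYYYMMDD01
  if (( first_of_today > current )); then
    echo "$first_of_today"                      # new day: restart NN at 01
  else
    echo $(( current + 1 ))                     # same day: bump NN by one
  fi
}

next_serial 2021121007 20211210   # 2021121008
next_serial 2021121007 20211211   # 2021121101
```

The sketch does not guard against more than 99 edits in one day, which would spill NN into the date part.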

On 11/12/21, set the myid by writing it into /data/zookeeper/data/myid: 1 on host 11, 2 on host 12 and 3 on host 21:

    [root@hdss7-11 opt]# echo '1' > /data/zookeeper/data/myid
    [root@hdss7-12 opt]# echo '2' > /data/zookeeper/data/myid
    [root@hdss7-21 opt]# echo '3' > /data/zookeeper/data/myid
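Each server's myid must equal the N of its own server.N line in zoo.cfg; that is how a node recognizes itself in the ensemble. The helper below is a sketch that assumes the zoo.cfg format shown earlier and looks up the id belonging to a ZooKeeper hostname (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Return N from the "server.N=<host>:2888:3888" line whose host equals $1.
# zoo.cfg content is read from stdin; prints nothing if the host is absent.
zk_myid_for() {
  local host=$1
  awk -v h="$host" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")      # kv[1] = "server.2", kv[2] = "zk2.od.com:2888:3888"
      split(kv[2], hp, ":")   # hp[1] = "zk2.od.com"
      if (hp[1] == h) {
        sub(/^server\./, "", kv[1])
        print kv[1]
        exit
      }
    }'
}

cfg='server.1=zk1.od.com:2888:3888
server.2=zk2.od.com:2888:3888
server.3=zk3.od.com:2888:3888'

# On host 12 (zk2) this is the value that belongs in /data/zookeeper/data/myid:
zk_myid_for zk2.od.com <<<"$cfg"   # 2
```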

Start ZooKeeper on all three hosts and check that it is listening on port 2181:

    [root@hdss7-11 opt]# /opt/zookeeper/bin/zkServer.sh start
    [root@hdss7-11 opt]# ps aux|grep zoo
    [root@hdss7-11 opt]# netstat -tlunp|grep 2181

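Jenkins deployment

Image preparation

On host 200, pull the Jenkins image, retag it and push it to Harbor (22b8b9a84dbe is the image ID that docker images showed in this environment; !$ is bash history expansion for the last argument of the previous command, i.e. the new tag):

    [root@hdss7-200 src]# docker pull jenkins/jenkins:2.263.1
    [root@hdss7-200 src]# docker images|grep jenkins
    [root@hdss7-200 src]# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.263.1
    [root@hdss7-200 src]# docker push !$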
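The tag applied above follows the pattern registry/project/repository:tag. In Harbor the project part (public, infra, base and app in this article) groups images and controls access. A minimal sketch that splits such a reference with bash parameter expansion; it assumes exactly one project level and an explicit tag, and the function name is illustrative:

```shell
#!/usr/bin/env bash
# Split "<registry>/<project>/<repo>:<tag>" into its parts (one project level assumed).
parse_image_ref() {
  local ref=$1
  local registry=${ref%%/*}      # text before the first "/"
  local rest=${ref#*/}           # project/repo:tag
  local project=${rest%%/*}
  local repo_tag=${rest#*/}      # repo:tag
  local repo=${repo_tag%:*}
  local tag=${repo_tag##*:}
  echo "registry=$registry project=$project repo=$repo tag=$tag"
}

parse_image_ref harbor.od.com/public/jenkins:v2.263.1
# registry=harbor.od.com project=public repo=jenkins tag=v2.263.1
```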

Generate an SSH key pair and create the build directory:

    [root@hdss7-200 src]# ssh-keygen -t rsa -b 2048 -C "evobot@foxmail.com" -N "" -f /root/.ssh/id_rsa
    [root@hdss7-200 src]# mkdir /data/dockerfile
    [root@hdss7-200 src]# cd /data/dockerfile/
    [root@hdss7-200 dockerfile]# mkdir jenkins
    [root@hdss7-200 dockerfile]# cd jenkins/

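Create the Jenkins Dockerfile with the following content. It runs as root, sets the timezone, bakes in the SSH key and the Docker registry credentials, disables SSH host-key prompts, and installs Docker so that the container can build images through the host's docker.sock:

    FROM harbor.od.com/public/jenkins:v2.263.1
    USER root
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        echo 'Asia/Shanghai' > /etc/timezone
    ADD id_rsa /root/.ssh/id_rsa
    ADD config.json /root/.docker/config.json
    ADD get-docker.sh /get-docker.sh
    RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && \
        /get-docker.sh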

Prepare the files the Dockerfile references:

    [root@hdss7-200 jenkins]# cp /root/.ssh/id_rsa .
    [root@hdss7-200 jenkins]# cp /root/.docker/config.json .
    [root@hdss7-200 jenkins]# curl -fsSL get.docker.com -o get-docker.sh
    [root@hdss7-200 jenkins]# chmod +x get-docker.sh
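The config.json copied into the image typically holds the registry credentials written by docker login, where the auth field is the base64 encoding of "username:password". A sketch that builds an equivalent file body for the Harbor account used in this article (admin / Harbor12345); the function names are illustrative. Because base64 is an encoding rather than encryption, the file and any image containing it must be treated as secrets:

```shell
#!/usr/bin/env bash
# base64("user:password"), as stored in the "auth" field of ~/.docker/config.json.
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Minimal config.json body with one inline credential entry.
docker_config_json() {
  local registry=$1 user=$2 pass=$3
  printf '{"auths":{"%s":{"auth":"%s"}}}\n' "$registry" "$(docker_auth "$user" "$pass")"
}

docker_config_json harbor.od.com admin Harbor12345
# {"auths":{"harbor.od.com":{"auth":"YWRtaW46SGFyYm9yMTIzNDU="}}}
```

The pull secret created later with kubectl create secret docker-registry stores a document of a similar shape under its .dockerconfigjson key.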

Gitee repository setup

Create a new repository named dubbo-demo-web on Gitee:

Download the Dubbo demo source package, turn it into a git repository and push it to Gitee:

    [root@hdss7-200 dubbo-demo-web-master]# git init
    [root@hdss7-200 dubbo-demo-web-master]# git add .
    [root@hdss7-200 dubbo-demo-web-master]# git commit -m 'first commit'
    [root@hdss7-200 dubbo-demo-web-master]# git remote add origin https://gitee.com/evobot/dubbo-demo-web.git
    [root@hdss7-200 dubbo-demo-web-master]# git push -u origin "master"

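Add the public key to Gitee: print it and paste it into Gitee's SSH public key settings:

    [root@hdss7-200 dubbo-demo-web-master]# cat /root/.ssh/id_rsa.pub
    ssh-rsa xxxxx evobot@foxmail.com

In Harbor, create a private project named infra: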

Image build

On host 200, build the Jenkins image from the Dockerfile and push it:

    [root@hdss7-200 ~]# cd /data/dockerfile/jenkins/
    [root@hdss7-200 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.263.1
    [root@hdss7-200 jenkins]# docker push harbor.od.com/infra/jenkins:v2.263.1
    # check that the key baked into the image is accepted by the git server
    [root@hdss7-200 jenkins]# docker run --rm harbor.od.com/infra/jenkins:v2.263.1 ssh -T git@gitee.com

If the build reports that some packages cannot be found, build the image with the following Dockerfile instead, which installs Docker from Docker's Debian apt repository:

    FROM harbor.od.com/public/jenkins:v2.263.1
    USER root
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        echo 'Asia/Shanghai' > /etc/timezone
    ADD id_rsa /root/.ssh/id_rsa
    ADD config.json /root/.docker/config.json
    RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config
    RUN apt-get update > /dev/null && apt-get install -y ca-certificates \
        curl \
        gnupg \
        apt-transport-https \
        lsb-release
    RUN DEBIAN_FRONTEND=noninteractive curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
    RUN echo \
        "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian \
        $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list
    RUN apt-get update > /dev/null && apt-get install -y docker-ce docker-ce-cli containerd.io

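Namespace and shared storage

On host 21, create the namespace and the pull secret for the private registry:

    [root@hdss7-21 ~]# kubectl create ns infra
    namespace/infra created
    [root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra

kubectl create secret docker-registry harbor creates an image-pull secret named harbor; the flags give the registry address, username and password, and -n infra places the secret in the infra namespace.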

Configure NFS shared storage on 21/22/200:

    # install nfs-utils on all three hosts: 200 is the NFS server, 21/22 are the clients
    [root@hdss7-200 jenkins]# yum install nfs-utils -y
    [root@hdss7-200 jenkins]# vi /etc/exports
    /data/nfs-volume 10.4.7.0/24(rw,no_root_squash)
    [root@hdss7-200 jenkins]# mkdir /data/nfs-volume
    [root@hdss7-200 jenkins]# mkdir /data/nfs-volume/jenkins_home
    [root@hdss7-200 jenkins]# systemctl start nfs
    [root@hdss7-200 jenkins]# systemctl enable nfs

On host 200, create the /data/k8s-yaml/jenkins directory and prepare the manifests (kubectl api-resources | grep deployment helps find the apiVersion of a resource type):

dp.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: jenkins
      namespace: infra
      labels:
        name: jenkins
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: jenkins
      template:
        metadata:
          labels:
            app: jenkins
            name: jenkins
        spec:
          volumes:
          - name: data
            nfs:
              server: hdss7-200
              path: /data/nfs-volume/jenkins_home
          - name: docker
            hostPath:
              path: /run/docker.sock
              type: ''
          containers:
          - name: jenkins
            image: harbor.od.com/infra/jenkins:v2.263.1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 8080
              protocol: TCP
            env:
            - name: JAVA_OPTS
              value: -Xmx512m -Xms512m
            volumeMounts:
            - name: data
              mountPath: /var/jenkins_home
            - name: docker
              mountPath: /run/docker.sock
          imagePullSecrets:
          - name: harbor
          securityContext:
            runAsUser: 0
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: jenkins
      namespace: infra
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 8080
      selector:
        app: jenkins

ingress.yaml

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: jenkins
      namespace: infra
    spec:
      rules:
      - host: jenkins.od.com
        http:
          paths:
          - path: /
            backend:
              serviceName: jenkins
              servicePort: 80
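The strategy block in dp.yaml (replicas: 1, maxSurge: 1, maxUnavailable: 1) bounds how many pods exist while a rollout is in progress. Per the Kubernetes Deployment documentation, maxSurge and maxUnavailable accept an integer or a percentage; a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down. A sketch of those bounds (function names are illustrative):

```shell
#!/usr/bin/env bash
# Resolve an int-or-percent value ("1" or "25%") against a replica count.
# mode=up rounds percentages up (maxSurge); mode=down rounds them down (maxUnavailable).
int_or_percent() {
  local value=$1 replicas=$2 mode=$3
  if [[ $value == *% ]]; then
    local pct=${value%\%}
    if [[ $mode == up ]]; then
      echo $(( (pct * replicas + 99) / 100 ))
    else
      echo $(( pct * replicas / 100 ))
    fi
  else
    echo "$value"
  fi
}

# Pod-count window a rolling update stays inside: replicas, maxSurge, maxUnavailable.
rollout_bounds() {
  local replicas=$1 surge unavail
  surge=$(int_or_percent "$2" "$replicas" up)
  unavail=$(int_or_percent "$3" "$replicas" down)
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}

rollout_bounds 1 1 1        # max_pods=2 min_available=0
rollout_bounds 4 25% 25%    # max_pods=5 min_available=3
```

With replicas: 1 and maxUnavailable: 1, Kubernetes may stop the only old pod before the new one is ready, so a brief gap during updates is possible; maxUnavailable: 0 avoids that, at the cost of needing room for the extra surge pod.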

Apply the manifests on host 21:

    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/dp.yaml
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/svc.yaml
    [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/ingress.yaml
    [root@hdss7-21 ~]# kubectl get pods -n infra
    NAME                       READY   STATUS    RESTARTS   AGE
    jenkins-546fbb867c-992l5   1/1     Running   0          3m27s
    [root@hdss7-21 ~]# kubectl get all -n infra
    NAME                           READY   STATUS    RESTARTS   AGE
    pod/jenkins-546fbb867c-992l5   1/1     Running   0          3m35s

    NAME              TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)   AGE
    service/jenkins   ClusterIP   192.168.187.251   <none>        80/TCP    62s

    NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/jenkins   1/1     1            1           3m35s

    NAME                                 DESIRED   CURRENT   READY   AGE
    replicaset.apps/jenkins-546fbb867c   1         1         1       3m35s

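On host 11, configure DNS: roll the serial forward by one and add the jenkins record:

    2021121007 ; serial (rolled forward)
    jenkins            A    10.4.7.10

Restart named and check the resolution:

    [root@hdss7-11 ~]# systemctl restart named
    [root@hdss7-11 ~]# dig -t A jenkins.od.com @10.4.7.11 +short
    10.4.7.10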

Jenkins configuration

Open jenkins.od.com. The first visit asks for the initial admin password, which you can read from the secrets/initialAdminPassword file under /data/nfs-volume/jenkins_home on host 200.

Skip the plugin installation and create the admin account:

    # Use exactly these credentials; later steps rely on them
    username: admin
    password: admin123
    full name: admin
    # then Save -> Save -> Start using Jenkins

Adjust the security settings under Manage Jenkins > Configure Global Security: check "Allow anonymous read access", and uncheck "Prevent cross site request forgery exploits" (this turns off CSRF protection).

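Install the Blue Ocean plugin

Blue Ocean shows the branches and results of every Pipeline run from the dashboard, lets you edit Pipeline-as-code in a visual editor, and visualizes complex continuous delivery (CD) Pipelines so that a Pipeline's state can be understood quickly and intuitively (we use it later to review the image build). Open the Manage Plugins menu and click Available (if the list is empty, click Check now to refresh it), find Blue Ocean with Ctrl+F, check it and install: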

After installation, a Blue Ocean entry appears in the main menu:

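Maven installation

Maven is a project management tool that builds Java projects and manages their dependencies. Download the desired version from the official site; this article uses 3.6.1.

From the dashboard or the command line, enter the Jenkins container and check its Java version: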

On host 200, create a Maven directory inside the Jenkins NFS directory:

    # 8u242 in the directory name comes from the Java version shown above
    [root@hdss7-200 src]# mkdir /data/nfs-volume/jenkins_home/maven-3.6.1-8u242
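The directory suffix is the JDK version reported by java -version inside the container, shortened to the major-u-update form, and the pipeline later reaches Maven through /var/jenkins_home/maven-${params.maven}/bin. A sketch of that naming convention, assuming a JDK 8 style version string such as 1.8.0_242 (the helper names are illustrative):

```shell
#!/usr/bin/env bash
# "1.8.0_242" -> "8u242" (JDK 8 style version strings only).
jdk_short() {
  local v=$1
  local major=${v#1.}      # "8.0_242"
  major=${major%%.*}       # "8"
  local update=${v##*_}    # "242"
  echo "${major}u${update}"
}

# Maven home as seen inside the Jenkins container (/var/jenkins_home is the NFS mount).
maven_home() {
  local maven_version=$1 java_version=$2
  echo "/var/jenkins_home/maven-${maven_version}-$(jdk_short "$java_version")"
}

maven_home 3.6.1 1.8.0_242   # /var/jenkins_home/maven-3.6.1-8u242
```

The maven build parameter therefore has to be 3.6.1-8u242 for the build stage to find bin/mvn in this directory.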

Inside the Jenkins container, confirm that you can log in to Harbor and reach the git repository:

    # log in to Harbor
    docker login harbor.od.com
    # check the SSH connection to Gitee
    ssh -i /root/.ssh/id_rsa -T git@gitee.com

Extract Maven into that directory and move its contents up one level so that bin/ sits directly under maven-3.6.1-8u242:

    [root@hdss7-200 src]# tar zxvf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u242/
    [root@hdss7-200 src]# cd !$
    cd /data/nfs-volume/jenkins_home/maven-3.6.1-8u242/
    [root@hdss7-200 maven-3.6.1-8u242]# ls
    apache-maven-3.6.1
    [root@hdss7-200 maven-3.6.1-8u242]# mv apache-maven-3.6.1/ ../
    [root@hdss7-200 maven-3.6.1-8u242]# mv ../apache-maven-3.6.1/* .
    [root@hdss7-200 maven-3.6.1-8u242]# ll
    total 28
    drwxr-xr-x 2 root root     97 Feb 22 16:15 bin
    drwxr-xr-x 2 root root     42 Feb 22 16:15 boot
    drwxr-xr-x 3  501 games    63 Apr  5  2019 conf
    drwxr-xr-x 4  501 games  4096 Feb 22 16:15 lib
    -rw-r--r-- 1  501 games 13437 Apr  5  2019 LICENSE
    -rw-r--r-- 1  501 games   182 Apr  5  2019 NOTICE
    -rw-r--r-- 1  501 games  2533 Apr  5  2019 README.txt

Edit conf/settings.xml and, inside the <mirrors> element, point the mirror at Aliyun:

    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>*</mirrorOf>
      <name>Nexus aliyun</name>
      <url>https://maven.aliyun.com/repository/public</url>
      <!-- old address: http://maven.aliyun.com/nexus/content/groups/public -->
    </mirror>
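With mirrorOf set to *, every repository request (Maven Central included) goes to the Aliyun URL, and artifacts are fetched by the standard Maven repository layout: the groupId with dots turned into slashes, then artifactId, version and artifactId-version.jar. The jmx_prometheus_javaagent download in the next section follows the same layout. A sketch of the mapping (the function name is illustrative):

```shell
#!/usr/bin/env bash
# Map groupId:artifactId:version to a jar URL in the standard Maven repository layout.
maven_jar_url() {
  local base=$1 gav=$2
  local group artifact version group_path
  IFS=: read -r group artifact version <<<"$gav"
  group_path=$(tr . / <<<"$group")    # io.prometheus.jmx -> io/prometheus/jmx
  echo "${base%/}/${group_path}/${artifact}/${version}/${artifact}-${version}.jar"
}

maven_jar_url https://maven.aliyun.com/repository/public io.prometheus.jmx:jmx_prometheus_javaagent:0.3.1
# https://maven.aliyun.com/repository/public/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar
```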

Dubbo image build

On host 200, pull the JRE image and push it to Harbor:

    [root@hdss7-200 jenkins_home]# docker pull docker.io/909336740/jre8:8u112
    [root@hdss7-200 jenkins_home]# docker images|grep jre
    [root@hdss7-200 jenkins_home]# docker tag fa3a085d6ef1 harbor.od.com/public/jre:8u112
    [root@hdss7-200 jenkins_home]# docker push !$

Create the /data/dockerfile/jre8 directory and a Dockerfile in it with the following content:

    FROM harbor.od.com/public/jre:8u112
    RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        echo 'Asia/Shanghai' > /etc/timezone
    ADD config.yml /opt/prom/config.yml
    ADD jmx_javaagent-0.3.1.jar /opt/prom/
    WORKDIR /opt/project_dir
    ADD entrypoint.sh /entrypoint.sh
    CMD ["/entrypoint.sh"]

Download and create the required files:

    # download jmx_javaagent-0.3.1.jar
    wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

config.yml

    ---
    rules:
      - pattern: '.*'

entrypoint.sh

    #!/bin/sh
    M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"
    C_OPTS=${C_OPTS}
    JAR_BALL=${JAR_BALL}
    exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}

Make the script executable and check the files:

    [root@hdss7-200 jre8]# chmod +x entrypoint.sh
    [root@hdss7-200 jre8]# ll
    total 368
    -rw-r--r-- 1 root root     29 Feb 22 16:48 config.yml
    -rwxr-xr-x 1 root root    236 Feb 22 16:48 entrypoint.sh
    -rw-r--r-- 1 root root 367417 May 10  2018 jmx_javaagent-0.3.1.jar
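At container start, entrypoint.sh assembles the java command from environment variables: JAR_BALL names the jar (set per Deployment, e.g. dubbo-server.jar), C_OPTS carries extra options, and M_PORT (default 12346) is the port on which the JMX exporter agent serves Prometheus metrics. The sketch below reproduces that assembly as a pure function so the result can be inspected without starting Java; the function name is hypothetical, and the pod IP is passed in instead of calling hostname -i:

```shell
#!/usr/bin/env bash
# Rebuild the command line entrypoint.sh would exec, without running it.
#   $1 = pod IP (what `hostname -i` returns inside the container)
# Reads JAR_BALL, C_OPTS and M_PORT from the environment, as entrypoint.sh does.
entrypoint_cmd() {
  local pod_ip=$1
  local -a cmd=(java -jar
    -Duser.timezone=Asia/Shanghai
    "-javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=${pod_ip}:${M_PORT:-12346}:/opt/prom/config.yml")
  local -a extra=()
  if [[ -n ${C_OPTS:-} ]]; then
    read -r -a extra <<<"$C_OPTS"   # whitespace split, like the unquoted ${C_OPTS}
    cmd+=("${extra[@]}")
  fi
  cmd+=("${JAR_BALL}")
  echo "${cmd[*]}"
}

# With M_PORT and C_OPTS unset:
JAR_BALL=dubbo-server.jar entrypoint_cmd 172.7.22.5
# java -jar -Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=172.7.22.5:12346:/opt/prom/config.yml dubbo-server.jar
```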

In Harbor, create a base project:

On host 200, build the image:

    [root@hdss7-200 jre8]# docker build . -t harbor.od.com/base/jre8:8u112
    [root@hdss7-200 jre8]# docker push !$
    docker push harbor.od.com/base/jre8:8u112

Dubbo continuous delivery build

Jenkins pipeline build

In Jenkins, create the pipeline in the order shown in the figures below:

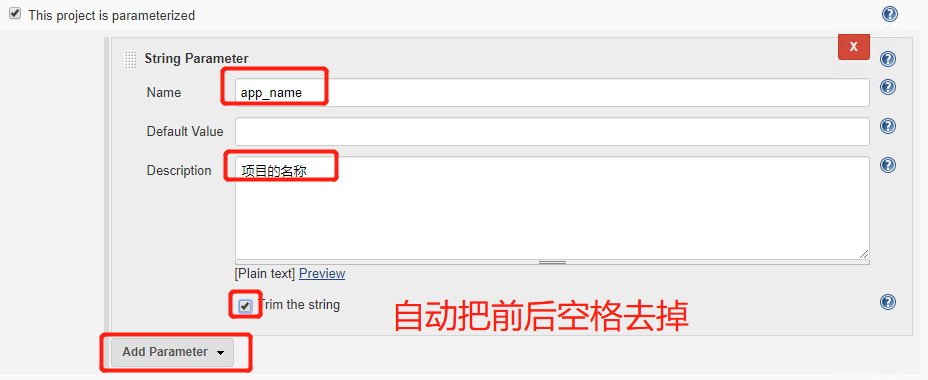

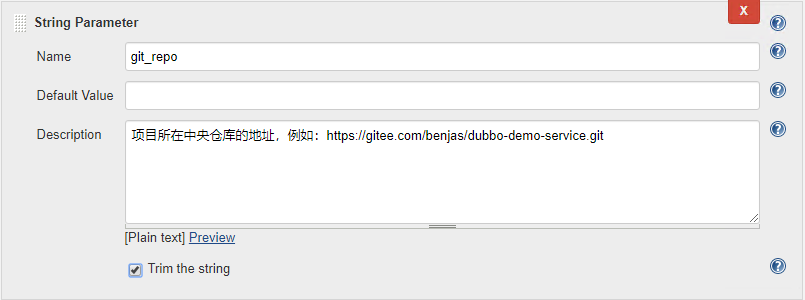

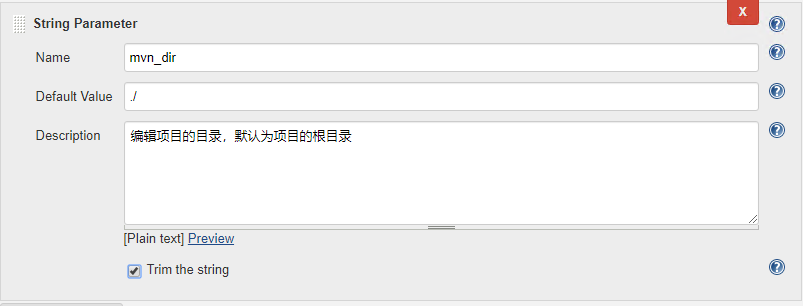

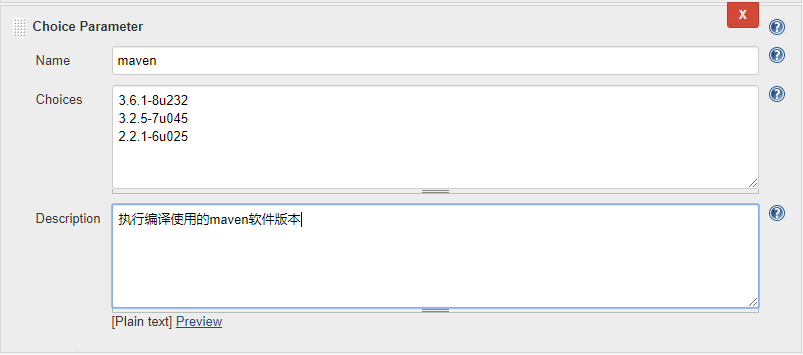

Add the 10 build parameters:

Fill the following into the pipeline script area below Advanced Project Options:

    pipeline {
      agent any
      stages {
        stage('pull') { // get project code from repo
          steps {
            sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
          }
        }
        stage('build') { // exec mvn cmd
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
          }
        }
        stage('package') { // move jar file into project_dir
          steps {
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"
          }
        }
        stage('image') { // build image and push to registry
          steps {
            writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}
    ADD ${params.target_dir}/project_dir /opt/project_dir"""
            sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
          }
        }
      }
    }

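Notes on the pipeline script:

pull: clone the project from the git repository and check out git_ver

build: run the Maven command (mvn_cmd) in the checked-out project

package: after Maven finishes, move the jar from target_dir into project_dir

image: build an image on top of base_image and push it to our Harbor registry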
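The image stage tags every build as harbor.od.com/image_name:git_ver_add_tag and builds it on harbor.od.com/base_image. A sketch that computes the same names from the build parameters, handy for knowing in advance which tag to put into a Deployment; the functions are illustrative and simply mirror the string templates in the pipeline script:

```shell
#!/usr/bin/env bash
# Image reference the 'image' stage pushes for one build.
pipeline_image() {
  local image_name=$1 git_ver=$2 add_tag=$3
  echo "harbor.od.com/${image_name}:${git_ver}_${add_tag}"
}

# Dockerfile the 'image' stage writes into the build directory.
pipeline_dockerfile() {
  local base_image=$1 target_dir=$2
  printf 'FROM harbor.od.com/%s\nADD %s/project_dir /opt/project_dir\n' "$base_image" "$target_dir"
}

pipeline_image app/dubbo-demo-service master 202202_1716
# harbor.od.com/app/dubbo-demo-service:master_202202_1716
pipeline_dockerfile base/jre8:8u112 ./dubbo-server/target
```

Because git_ver becomes part of the tag, it must only contain characters valid in a Docker tag; a branch name containing a slash, for example, would break the docker build -t step.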

Run the build

Create the app project in Harbor.

Click Build with Parameters and fill in the parameters:

    app_name: dubbo-demo-service
    image_name: app/dubbo-demo-service
    git_repo: git@gitee.com:evobot/dubbo-demo-web.git
    git_ver: master
    add_tag: 202202_1716
    mvn_dir: ./
    target_dir: ./dubbo-server/target
    mvn_cmd: mvn clean package -Dmaven.test.skip=true
    base_image: base/jre8:8u112
    maven: 3.6.1-8u242
    # maven must match the Maven directory created under jenkins_home (maven-3.6.1-8u242)

Click Build and wait for the build to finish.

Run the build:

Check the Harbor repository:

Dubbo delivery

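On host 200, create the /data/k8s-yaml/dubbo-demo-service directory and the manifest below; set image to the tag your build actually produced (git_ver_add_tag):

dp.yaml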

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-demo-service
      namespace: app
      labels:
        name: dubbo-demo-service
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-demo-service
      template:
        metadata:
          labels:
            app: dubbo-demo-service
            name: dubbo-demo-service
        spec:
          containers:
          - name: dubbo-demo-service
            image: harbor.od.com/app/dubbo-demo-service:master_202202_2014
            ports:
            - containerPort: 20880
              protocol: TCP
            env:
            - name: JAR_BALL
              value: dubbo-server.jar
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

On host 21, create the namespace and the Harbor secret:

    [root@hdss7-21 ~]# kubectl create ns app
    [root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n app

On host 11, check the ZooKeeper status:

    [root@hdss7-11 ~]# cd /opt/zookeeper
    [root@hdss7-11 zookeeper]# bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/zookeeper/bin/../conf/zoo.cfg
    Mode: follower
    # connect to zk: so far it only contains the zookeeper node, no dubbo yet
    [root@hdss7-11 zookeeper]# bin/zkCli.sh -server localhost:2181
    [zk: localhost:2181(CONNECTED) 1] ls /
    [zookeeper]

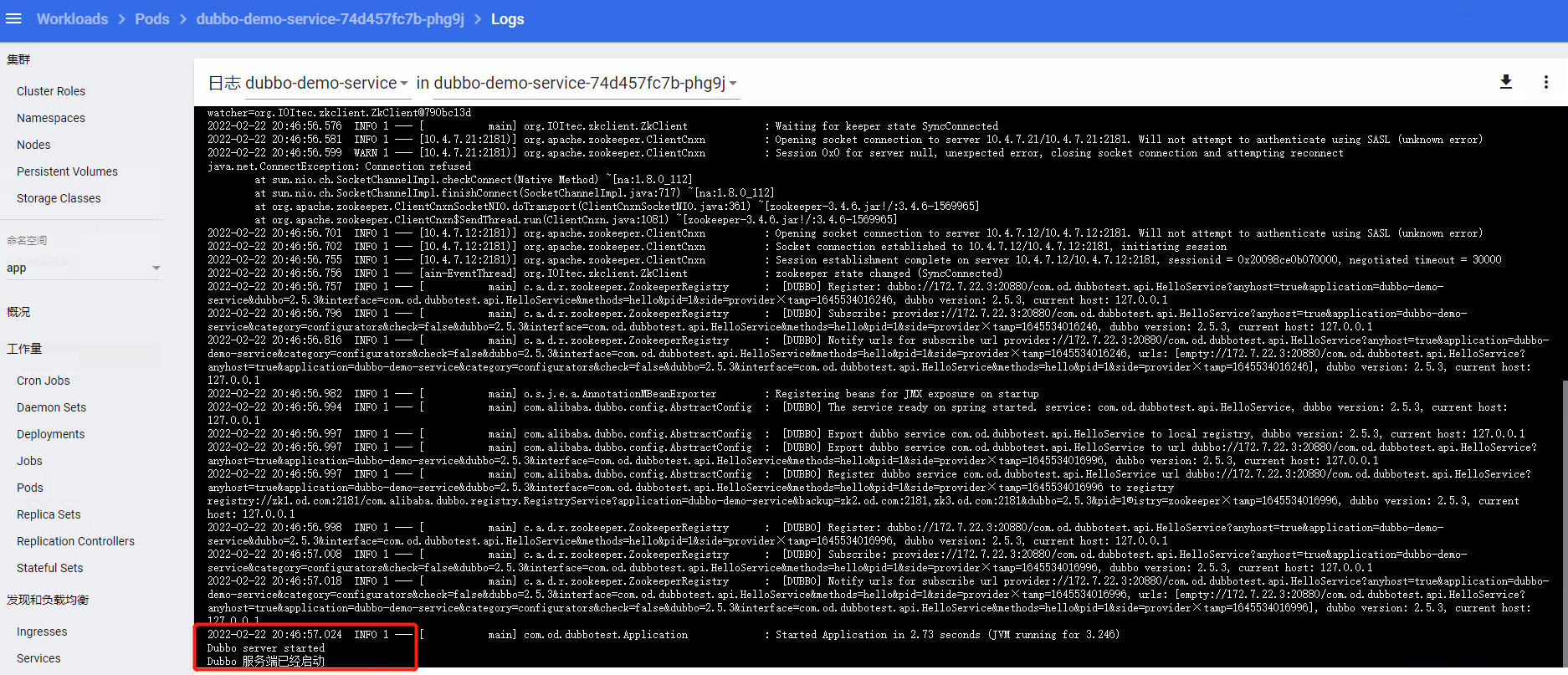

On host 22, apply the Dubbo manifest:

    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/dp.yaml
    deployment.apps/dubbo-demo-service created

Check the Dubbo pod:

Back on host 11, the zk tree now contains a dubbo node: the Dubbo service has registered with the ZooKeeper registry, so the delivery succeeded:

    [zk: localhost:2181(CONNECTED) 2] ls /
    [dubbo, zookeeper]
    [zk: localhost:2181(CONNECTED) 3]
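Under /dubbo, Dubbo's ZooKeeper registry keeps one node per service interface, with providers and consumers children whose names are URL-encoded service URLs, so reading an entry listed by ls /dubbo/<interface>/providers only needs percent-decoding. A sketch; the sample node name is made up for illustration (the interface com.example.HelloService and its parameter are hypothetical, not copied from this cluster):

```shell
#!/usr/bin/env bash
# Percent-decode a Dubbo registry node name, e.g. a child of /dubbo/<interface>/providers.
urldecode() {
  local escaped
  escaped=$(printf '%s' "$1" | sed 's/%/\\x/g')   # %3A -> \x3A
  printf '%b' "$escaped"                          # expand the \xHH escapes
}

node='dubbo%3A%2F%2F172.7.22.5%3A20880%2Fcom.example.HelloService%3Fapplication%3Ddemo'
urldecode "$node"; echo
# dubbo://172.7.22.5:20880/com.example.HelloService?application=demo
```

The decoded URL shows which pod IP and port (20880, the containerPort in dp.yaml) each provider registered.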

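Reviewing the pipeline build in Blue Ocean

Open Blue Ocean:

Click the number of a successful build:

Look at the individual steps. The Jenkins pipeline build consists of these five steps:

pull the code -> compile the code -> package the jar into the target directory -> build the image -> end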

Delivering dubbo-monitor

To see what is registered in zk conveniently, we need a graphical interface: dubbo-monitor.

On host 200, download the dubbo-monitor package:

    [root@hdss7-200 dubbo-demo-service]# cd /opt/src/
    [root@hdss7-200 src]# wget https://github.com/Jeromefromcn/dubbo-monitor/archive/master.zip
    [root@hdss7-200 src]# unzip master.zip
    [root@hdss7-200 src]# mv dubbo-monitor-master/ /opt/src/dubbo-monitor

Edit the dubbo-monitor configuration file dubbo-monitor/dubbo-monitor-simple/conf/dubbo_origin.properties:

    dubbo.container=log4j,spring,registry,jetty
    dubbo.application.name=dubbo-monitor
    dubbo.application.owner=evobot
    dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181
    dubbo.protocol.port=20880
    dubbo.jetty.port=8080
    dubbo.jetty.directory=/dubbo-monitor-simple/monitor
    dubbo.charts.directory=/dubbo-monitor-simple/charts
    dubbo.statistics.directory=/dubbo-monitor-simple/statistics
    dubbo.log4j.file=logs/dubbo-monitor-simple.log
    dubbo.log4j.level=WARN

Edit dubbo-monitor/dubbo-monitor-simple/bin/start.sh: change the JVM options and startup section to the following, and delete everything after it in the original script:

    if [ -n "$BITS" ]; then
        JAVA_MEM_OPTS=" -server -Xmx128m -Xms128m -Xmn32m -XX:PermSize=16m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "
    else
        JAVA_MEM_OPTS=" -server -Xms128m -Xmx128m -XX:PermSize=16m -XX:SurvivorRatio=2 -XX:+UseParallelGC "
    fi
    echo -e "Starting the $SERVER_NAME ...\c"
    exec java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1
    # delete everything below this line in the original script

On host 200, build the image:

    [root@hdss7-200 src]# cp -a dubbo-monitor/ /data/dockerfile/
    [root@hdss7-200 src]# cd /data/dockerfile/dubbo-monitor/
    [root@hdss7-200 dubbo-monitor]# docker build . -t harbor.od.com/infra/dubbo-monitor:latest
    [root@hdss7-200 dubbo-monitor]# docker push !$

On host 200, create the /data/k8s-yaml/dubbo-monitor directory and write the manifests:

dp.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-monitor
      namespace: infra
      labels:
        name: dubbo-monitor
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-monitor
      template:
        metadata:
          labels:
            app: dubbo-monitor
            name: dubbo-monitor
        spec:
          containers:
          - name: dubbo-monitor
            image: harbor.od.com/infra/dubbo-monitor:latest
            ports:
            - containerPort: 8080
              protocol: TCP
            - containerPort: 20880
              protocol: TCP
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: dubbo-monitor
      namespace: infra
    spec:
      ports:
      - protocol: TCP
        port: 8080
        targetPort: 8080
      selector:
        app: dubbo-monitor

ingress.yaml

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: dubbo-monitor
      namespace: infra
    spec:
      rules:
      - host: dubbo-monitor.od.com
        http:
          paths:
          - path: /
            backend:
              serviceName: dubbo-monitor
              servicePort: 8080

On host 11, configure DNS: roll the serial forward, add the record "dubbo-monitor A 10.4.7.10", and restart named as before.

On host 22, apply the manifests:

    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml
    deployment.apps/dubbo-monitor created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/svc.yaml
    service/dubbo-monitor created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/ingress.yaml
    ingress.extensions/dubbo-monitor created

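Open dubbo-monitor.od.com; if the page loads normally, the delivery is complete:

Delivering the Dubbo service consumer

Switch to the client branch of the Dubbo project, log in to Jenkins and fill in the build parameters again:

    app_name: dubbo-demo-consumer
    image_name: app/dubbo-demo-consumer
    git_repo: git@gitee.com:evobot/dubbo-demo-web.git
    git_ver: client
    add_tag: 220223_2016
    mvn_dir: ./
    target_dir: ./dubbo-client/target
    mvn_cmd: mvn clean package -e -q -Dmaven.test.skip=true
    base_image: base/jre8:8u112
    maven: 3.6.1-8u242

Click Build and wait for the build to finish. In mvn_cmd, -q limits Maven's output to errors and -e prints full error stack traces, so the log stays short but still shows what went wrong.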

After a successful build, the new image appears in Harbor:

On host 200, create /data/k8s-yaml/dubbo-demo-consumer and prepare the manifests:

dp.yaml

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
      labels:
        name: dubbo-demo-consumer
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: dubbo-demo-consumer
      template:
        metadata:
          labels:
            app: dubbo-demo-consumer
            name: dubbo-demo-consumer
        spec:
          containers:
          - name: dubbo-demo-consumer
            image: harbor.od.com/app/dubbo-demo-consumer:client_220223_2016
            ports:
            - containerPort: 8080
              protocol: TCP
            - containerPort: 20880
              protocol: TCP
            env:
            - name: JAR_BALL
              value: dubbo-client.jar
            imagePullPolicy: IfNotPresent
          imagePullSecrets:
          - name: harbor
          restartPolicy: Always
          terminationGracePeriodSeconds: 30
          securityContext:
            runAsUser: 0
          schedulerName: default-scheduler
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      revisionHistoryLimit: 7
      progressDeadlineSeconds: 600

svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
    spec:
      ports:
      - protocol: TCP
        port: 8080
        targetPort: 8080
      selector:
        app: dubbo-demo-consumer

ingress.yaml

    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: dubbo-demo-consumer
      namespace: app
    spec:
      rules:
      - host: demo.od.com
        http:
          paths:
          - path: /
            backend:
              serviceName: dubbo-demo-consumer
              servicePort: 8080

On host 11, add the DNS record (roll the serial forward by one):

    2021121009 ; serial
    demo               A    10.4.7.10

Apply the manifests from a node:

    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/dp.yaml
    deployment.apps/dubbo-demo-consumer created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/svc.yaml
    service/dubbo-demo-consumer created
    [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/ingress.yaml
    ingress.extensions/dubbo-demo-consumer created

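Refresh the dubbo-monitor page:

Open http://demo.od.com/hello?name=evobot in a browser; if the following page appears, the delivery succeeded:

Dubbo's main strength is soft load balancing and easy scaling: both the backend (providers) and the frontend (consumers) can be scaled freely. In the dashboard, raise the dubbo-demo-service Deployment to 3 replicas and the consumer to 2 pods:

Scaling in works the same way at any time: scale out when traffic is high and scale in when it drops.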
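Scaling works because each consumer chooses a provider per call from the list the registry pushes to it. Dubbo ships several load-balancing strategies (its default is random; round robin is another option). A round-robin sketch over provider addresses, purely to illustrate how calls spread once dubbo-demo-service runs 3 replicas; the IP list is a placeholder and the function is not Dubbo's implementation:

```shell
#!/usr/bin/env bash
# Round robin: call number i goes to provider (i mod n).
pick_provider() {
  local call=$1; shift
  local -a providers=("$@")
  echo "${providers[call % ${#providers[@]}]}"
}

providers=(172.7.21.6:20880 172.7.22.5:20880 172.7.22.9:20880)
for call in 0 1 2 3; do
  pick_provider "$call" "${providers[@]}"
done
```

Calls 0 to 3 land on the first, second, third and then the first provider again; adding a replica simply lengthens the list the consumer rotates through.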

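Routine maintenance of the Dubbo cluster

Day-to-day maintenance inevitably involves code iterations; below we simulate an iteration triggered by a code update.

On the client branch of dubbo-demo-web, edit HelloAction.java under dubbo-client/src/main/java/com/od/dubbotest/action and set the version to 0.2:

    public class HelloAction {

        // Dubbo injects a proxy for the remote HelloService provider
        @Reference
        HelloService helloService;

        @RequestMapping
        public String say(String name) {
            System.out.println("HelloAction接收到请求:" + name);
            String str = "<h1>这是Dubbo 消费者端(springboot)</h1>";
            str += "<h2>版本更新 v0.2</h2>";   // version marker shown on the page
            str += helloService.hello(name);   // RPC call to the provider
            System.out.println("HelloService返回到结果:" + str);
            return str;
        }
    }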

Then commit the change and push it:

    [root@hdss7-200 action]# git add .
    [root@hdss7-200 action]# git status
    # On branch client
    # Changes to be committed:
    #   (use "git reset HEAD <file>..." to unstage)
    #
    #	modified:   HelloAction.java
    #
    [root@hdss7-200 action]# git commit -m 'v0.2'
    [client 4ff957e] v0.2
     1 file changed, 1 insertion(+), 1 deletion(-)
    [root@hdss7-200 action]# git push

Get the full ID of this commit:

Fill in the build parameters in Jenkins:

    # "-b client" in git_repo makes git clone check out the client branch
    app_name: dubbo-demo-consumer
    image_name: app/dubbo-demo-consumer
    git_repo: -b client git@gitee.com:evobot/dubbo-demo-web.git
    git_ver: 4ff957ed4ae8e5a378ad765dae7d44035b366646
    add_tag: 2202023_2125
    mvn_dir: ./
    target_dir: ./dubbo-client/target
    mvn_cmd: mvn clean package -e -q -Dmaven.test.skip=true
    base_image: base/jre8:8u112
    maven: 3.6.1-8u242

Click Build and wait for the build to finish.
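Putting -b client into git_repo works because the pull stage pastes the parameter verbatim into its git clone command, so the option simply becomes part of that command line; git_ver (here a full commit hash) is checked out afterwards. A sketch that prints the command the pull stage would run for given parameters; the function is illustrative and the build number 7 is an arbitrary example:

```shell
#!/usr/bin/env bash
# Print the shell command the 'pull' stage runs for the given build parameters.
pull_cmd() {
  local git_repo=$1 app_name=$2 git_ver=$3 build_number=$4
  local dir="${app_name}/${build_number}"
  echo "git clone ${git_repo} ${dir} && cd ${dir} && git checkout ${git_ver}"
}

pull_cmd "-b client git@gitee.com:evobot/dubbo-demo-web.git" dubbo-demo-consumer \
  4ff957ed4ae8e5a378ad765dae7d44035b366646 7
```

Since the value is pasted unquoted into a shell command, whatever is typed into git_repo is interpreted by the shell on the Jenkins agent, so build parameters should only be filled in by trusted users.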

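When the build finishes, the new image appears in Harbor:

Then, in the dashboard, edit the YAML of the dubbo-demo-consumer Deployment and change the image to the new one:

After the update, visit http://demo.od.com/hello?name=evobot again and check the version change. That completes a version iteration: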

Kubernetes cluster failure test

Node outage

Scale both the consumer and the service to 2 replicas and check which nodes the pods run on:

    [root@hdss7-21 ~]# kubectl get pod -n app -o wide
    NAME                                   READY   STATUS    RESTARTS   AGE     IP           NODE                NOMINATED NODE   READINESS GATES
    dubbo-demo-consumer-5567b64d55-5zztx   1/1     Running   0          5m52s   172.7.21.5   hdss7-21.host.com   <none>           <none>
    dubbo-demo-consumer-5567b64d55-vhwnc   1/1     Running   0          27m     172.7.22.7   hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vtpm6    1/1     Running   0          4h38m   172.7.22.5   hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-w86tr    1/1     Running   0          5m47s   172.7.21.6   hdss7-21.host.com   <none>           <none>

Each node runs 2 of the pods.

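Simulate a node outage by shutting down host 21: the dashboard and the demo site both become unreachable. Then delete the offline node; otherwise k8s treats the outage as a transient problem such as network jitter and keeps waiting for the node to come back instead of rescheduling its pods right away:

    [root@hdss7-22 ~]# kubectl get nodes
    NAME                STATUS     ROLES    AGE     VERSION
    hdss7-21.host.com   NotReady   <none>   5h38m   v1.16.10
    hdss7-22.host.com   Ready      <none>   5h42m   v1.16.10
    [root@hdss7-22 ~]# kubectl delete nodes hdss7-21.host.com
    node "hdss7-21.host.com" deleted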

Once the node is deleted, k8s self-healing recreates the pods on node 22, and the demo site and the dashboard are reachable again:

    [root@hdss7-22 ~]# kubectl get pod -n app -o wide
    NAME                                   READY   STATUS    RESTARTS   AGE     IP            NODE                NOMINATED NODE   READINESS GATES
    dubbo-demo-consumer-5567b64d55-hxrfj   1/1     Running   0          2m15s   172.7.22.10   hdss7-22.host.com   <none>           <none>
    dubbo-demo-consumer-5567b64d55-vhwnc   1/1     Running   0          33m     172.7.22.7    hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vbhjg    1/1     Running   0          2m15s   172.7.22.9    hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vtpm6    1/1     Running   0          4h44m   172.7.22.5    hdss7-22.host.com   <none>           <none>

On hosts 11 and 12, comment out node 21 in the nginx configuration and restart nginx. That completes the emergency handling of a node outage.
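Spotting the failed node can be scripted from kubectl get nodes output, where STATUS is the second column. A sketch that reads that output on stdin and lists every node whose status is not exactly Ready, shown here against the output captured during this test (the function name is illustrative; on a live cluster you would pipe kubectl get nodes into it):

```shell
#!/usr/bin/env bash
# List nodes whose STATUS is not exactly "Ready" (header line skipped).
# Cordoned nodes show "Ready,SchedulingDisabled" and are therefore listed too.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

sample='NAME                STATUS     ROLES    AGE     VERSION
hdss7-21.host.com   NotReady   <none>   5h38m   v1.16.10
hdss7-22.host.com   Ready      <none>   5h42m   v1.16.10'

not_ready_nodes <<<"$sample"   # hdss7-21.host.com
```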

Cluster recovery

Restart host 21 and check its services. Its kubelet registers the node again; re-apply the role labels:

    [root@hdss7-21 ~]# supervisorctl status
    etcd-server-7-21                 RUNNING   pid 959, uptime 0:00:38
    flanneld-7-21                    RUNNING   pid 951, uptime 0:00:38
    kube-apiserver-7-21              RUNNING   pid 954, uptime 0:00:38
    kube-controller-manager-7-21     RUNNING   pid 956, uptime 0:00:38
    kube-kubelet-7-21                RUNNING   pid 950, uptime 0:00:38
    kube-proxy-7-21                  RUNNING   pid 957, uptime 0:00:38
    kube-scheduler-7-21              RUNNING   pid 953, uptime 0:00:38
    [root@hdss7-21 ~]# kubectl label nodes hdss7-21.host.com node-role.kubernetes.io/master=
    node/hdss7-21.host.com labeled
    [root@hdss7-21 ~]# kubectl label nodes hdss7-21.host.com node-role.kubernetes.io/node=
    node/hdss7-21.host.com labeled
    [root@hdss7-21 ~]# kubectl get node
    NAME                STATUS   ROLES         AGE     VERSION
    hdss7-21.host.com   Ready    master,node   72s     v1.16.10
    hdss7-22.host.com   Ready    master,node   5h52m   v1.16.10

Edit nginx on 11/12 to re-enable node 21, then check where the pods run:

    [root@hdss7-21 ~]# kubectl get pod -n app -o wide
    NAME                                   READY   STATUS    RESTARTS   AGE     IP            NODE                NOMINATED NODE   READINESS GATES
    dubbo-demo-consumer-5567b64d55-hxrfj   1/1     Running   0          10m     172.7.22.10   hdss7-22.host.com   <none>           <none>
    dubbo-demo-consumer-5567b64d55-vhwnc   1/1     Running   0          41m     172.7.22.7    hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vbhjg    1/1     Running   0          10m     172.7.22.9    hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vtpm6    1/1     Running   0          4h52m   172.7.22.5    hdss7-22.host.com   <none>           <none>

All pods are still on node 22; next, reschedule them to balance resource usage.

Delete one consumer pod and one service pod:

    [root@hdss7-21 ~]# kubectl delete pod dubbo-demo-consumer-5567b64d55-hxrfj -n app
    pod "dubbo-demo-consumer-5567b64d55-hxrfj" deleted
    [root@hdss7-21 ~]# kubectl delete pod dubbo-demo-service-74d457fc7b-vbhjg -n app
    pod "dubbo-demo-service-74d457fc7b-vbhjg" deleted

Check the pods again: they are now spread across both nodes:

    [root@hdss7-21 ~]# kubectl get pod -n app -o wide
    NAME                                   READY   STATUS    RESTARTS   AGE     IP           NODE                NOMINATED NODE   READINESS GATES
    dubbo-demo-consumer-5567b64d55-jcwbx   1/1     Running   0          86s     172.7.21.3   hdss7-21.host.com   <none>           <none>
    dubbo-demo-consumer-5567b64d55-vhwnc   1/1     Running   0          44m     172.7.22.7   hdss7-22.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-j9lrt    1/1     Running   0          56s     172.7.21.4   hdss7-21.host.com   <none>           <none>
    dubbo-demo-service-74d457fc7b-vtpm6    1/1     Running   0          4h55m   172.7.22.5   hdss7-22.host.com   <none>           <none>

On host 21, check the iptables rules. After the reboot, Docker's default MASQUERADE rule for the node's pod subnet 172.7.21.0/24 is present again; it lacks the "! -d 172.7.0.0/16" exclusion, so traffic between pods on different nodes would be SNATed to the node IP. Delete it and insert the rule with the exclusion:

    [root@hdss7-21 ~]# iptables-save |grep -i postrouting
    :POSTROUTING ACCEPT [1:76]
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
    -A POSTROUTING -s 172.7.21.2/32 -d 172.7.21.2/32 -p tcp -m tcp --dport 80 -j MASQUERADE
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
    -A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE
    # the rule is missing the 172.7.0.0/16 exclusion; delete it
    [root@hdss7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
    # insert the rule that excludes 172.7.0.0/16
    [root@hdss7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
    [root@hdss7-21 ~]# iptables-save |grep -i postrouting
    :POSTROUTING ACCEPT [0:0]
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A POSTROUTING -s 172.7.21.2/32 -d 172.7.21.2/32 -p tcp -m tcp --dport 80 -j MASQUERADE
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
    -A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE
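The inserted rule (-s 172.7.21.0/24 ! -d 172.7.0.0/16) only SNATs traffic that leaves this node's pod subnet for a destination outside the whole pod network, so pod-to-pod traffic across nodes keeps its real source IP. A sketch of that matching logic with a pure-bash IPv4 CIDR test; the function names are illustrative and the ! -o docker0 interface match is deliberately left out:

```shell
#!/usr/bin/env bash
# Dotted IPv4 address -> 32-bit integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 172.7.0.0/16).
in_cidr() {
  local ip net bits mask
  ip=$(ip2int "$1")
  net=$(ip2int "${2%/*}")
  bits=${2#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Mirror "-s 172.7.21.0/24 ! -d 172.7.0.0/16 -j MASQUERADE" (interface match omitted).
would_masquerade() {
  local src=$1 dst=$2
  in_cidr "$src" 172.7.21.0/24 && ! in_cidr "$dst" 172.7.0.0/16
}

would_masquerade 172.7.21.3 172.7.22.5 && echo SNAT || echo "no SNAT"   # no SNAT (pod to pod)
would_masquerade 172.7.21.3 10.4.7.11  && echo SNAT || echo "no SNAT"   # SNAT (leaves the pod network)
```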