K8S Management Commands

Basic Operations

- Configure kubectl command completion. Install the bash-completion package with yum, then run the following commands:

      source /usr/share/bash-completion/bash_completion
      source <(kubectl completion bash)
      echo "source <(kubectl completion bash)" >> ~/.bashrc

- List namespaces with kubectl get namespace or its short form kubectl get ns:

      [root@hdss7-22 ~]# kubectl get namespace
      NAME              STATUS   AGE
      default           Active   54d
      kube-node-lease   Active   54d
      kube-public       Active   54d
      kube-system       Active   54d
      [root@hdss7-22 ~]# kubectl get ns
      NAME              STATUS   AGE
      default           Active   54d
      kube-node-lease   Active   54d
      kube-public       Active   54d
      kube-system       Active   54d

- List resource objects of the common types (pod, service, daemonset, and so on) with kubectl get all:

      [root@hdss7-22 ~]# kubectl get all
      NAME                 READY   STATUS    RESTARTS   AGE
      pod/nginx-ds-bw2k9   1/1     Running   0          22h
      pod/nginx-ds-x2j7p   1/1     Running   0          22h

      NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
      service/kubernetes   ClusterIP   192.168.0.1   <none>        443/TCP   54d

      NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
      daemonset.apps/nginx-ds   2         2         2       2            2           <none>          22h

- Create and delete namespaces:

  - Create a namespace with kubectl create ns app:

        [root@hdss7-22 ~]# kubectl create ns app
        namespace/app created
        [root@hdss7-22 ~]# kubectl get ns
        NAME              STATUS   AGE
        app               Active   4s
        default           Active   54d
        kube-node-lease   Active   54d
        kube-public       Active   54d
        kube-system       Active   54d

  - Delete a namespace with kubectl delete ns app:

        [root@hdss7-22 ~]# kubectl delete ns app
        namespace "app" deleted
        [root@hdss7-22 ~]# kubectl get ns
        NAME              STATUS   AGE
        default           Active   54d
        kube-node-lease   Active   54d
        kube-public       Active   54d
        kube-system       Active   54d

- Create a deployment with the kubectl create deployment command:

      [root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.21 -n kube-public
      deployment.apps/nginx-dp created
      [root@hdss7-21 ~]# kubectl get deployment -n kube-public
      NAME       READY   UP-TO-DATE   AVAILABLE   AGE
      nginx-dp   1/1     1            1           22s

- View the resources in a given namespace:

      # List the deployments in the namespace
      kubectl get deploy -n kube-public
      # List the pods in the namespace
      kubectl get pods -o wide -n kube-public
      # Show the detailed description of a deployment in the namespace
      kubectl describe deployment nginx-dp -n kube-public

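- Enter a pod:

      # Look up the pod name
      kubectl get pods -n kube-public
      # Open a shell inside the pod
      kubectl exec -it nginx-dp-7d995b4bd5-dk2dk bash -n kube-public

  Example session:

      [root@hdss7-22 ~]# kubectl get pods -n kube-public
      NAME                        READY   STATUS    RESTARTS   AGE
      nginx-dp-7d995b4bd5-dk2dk   1/1     Running   0          5m32s
      [root@hdss7-22 ~]# kubectl exec -it nginx-dp-7d995b4bd5-dk2dk bash -n kube-public
      root@nginx-dp-7d995b4bd5-dk2dk:/#

  Newer kubectl releases expect the command after a -- separator, for example kubectl exec -it nginx-dp-7d995b4bd5-dk2dk -n kube-public -- bash.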

- Delete a pod. The deployment controller expects one pod replica, so as soon as the pod is deleted Kubernetes starts a new one to replace it. Adding --force --grace-period=0 forces the deletion without waiting for graceful termination:

      # Delete the pod
      kubectl delete pod nginx-dp-7d995b4bd5-dk2dk -n kube-public
      # Delete the deployment
      kubectl delete deployment nginx-dp -n kube-public

  Example session:

      [root@hdss7-22 ~]# kubectl delete pod nginx-dp-7d995b4bd5-dk2dk -n kube-public
      pod "nginx-dp-7d995b4bd5-dk2dk" deleted
      [root@hdss7-22 ~]# kubectl get pod -n kube-public
      NAME                        READY   STATUS    RESTARTS   AGE
      nginx-dp-7d995b4bd5-wp99v   1/1     Running   0          10s
      [root@hdss7-22 ~]# kubectl delete deployment nginx-dp -n kube-public
      deployment.extensions "nginx-dp" deleted
      [root@hdss7-22 ~]# kubectl get deployment -n kube-public
      No resources found.
      [root@hdss7-22 ~]# kubectl get all -n kube-public
      No resources found.
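The text mentions --force --grace-period=0, while the recorded commands delete the pod gracefully. A minimal sketch of the forced variant is shown here. The run helper and the DRY_RUN variable are assumptions of this sketch (they are not part of kubectl): by default the command is only printed, and it is executed only when DRY_RUN=0 is set. The pod name is the one from the listing above.

```shell
#!/bin/sh
# Sketch: force-delete a pod without waiting for graceful termination.
# DRY_RUN is a convention of this sketch, not a kubectl feature.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"          # only show what would be executed
  else
    "$@"               # really execute the command
  fi
}

# force_delete_pod POD NAMESPACE
force_delete_pod() {
  run kubectl delete pod "$1" -n "$2" --force --grace-period=0
}

force_delete_pod nginx-dp-7d995b4bd5-dk2dk kube-public
```

Because the deployment still asks for one replica, a replacement pod would appear after a forced delete just as it does after a graceful one.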

Service Resource Management

- First, create a deployment on host 22:

      [root@hdss7-22 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.21 -n kube-public
      deployment.apps/nginx-dp created
      [root@hdss7-22 ~]# kubectl get all -n kube-public
      NAME                            READY   STATUS    RESTARTS   AGE
      pod/nginx-dp-7d995b4bd5-qrkft   1/1     Running   0          8s

      NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
      deployment.apps/nginx-dp   1/1     1            1           8s

      NAME                                  DESIRED   CURRENT   READY   AGE
      replicaset.apps/nginx-dp-7d995b4bd5   1         1         1       8s

- Expose a port for the deployment, which creates a service with a cluster IP:

      # Expose port 80 of the given deployment
      kubectl expose deployment nginx-dp --port=80 -n kube-public
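The expose command does not print the cluster IP that the new service receives. A small sketch of how it could be looked up follows. The helper name cluster_ip_of is hypothetical, and the sample table is the kubectl get svc output recorded later in this walkthrough, so the block runs without a cluster.

```shell
#!/bin/sh
# cluster_ip_of NAME: read `kubectl get svc` table output on stdin and
# print the CLUSTER-IP column of the row whose NAME matches.
cluster_ip_of() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $3 }'
}

# Sample table taken from this walkthrough. On a live cluster, pipe in
#   kubectl get svc -n kube-public
# or query the field directly:
#   kubectl get svc nginx-dp -n kube-public -o jsonpath='{.spec.clusterIP}'
sample='NAME       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
nginx-dp   ClusterIP   192.168.28.186   <none>        80/TCP    4d20h'

printf '%s\n' "$sample" | cluster_ip_of nginx-dp
```

The printed address is the one to pass to curl from a node.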

- Then access the service with curl from host 22:

      [root@hdss7-22 ~]# curl 192.168.28.186
      # ipvsadm shows how the cluster IP maps to the backend pod IPs
      [root@hdss7-22 ~]# ipvsadm -Ln
      IP Virtual Server version 1.2.1 (size=4096)
      Prot LocalAddress:Port Scheduler Flags
        -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
      TCP  192.168.0.1:443 nq
        -> 10.4.7.21:6443               Masq    1      0          0
        -> 10.4.7.22:6443               Masq    1      0          0
      TCP  192.168.28.186:80 nq
        -> 172.7.22.3:80                Masq    1      0          1

- Scale out the pod replicas. Use the scale subcommand with the --replicas flag to scale the deployment to 2 pods:

      # Scale the given deployment
      kubectl scale deployment nginx-dp --replicas=2 -n kube-public

  Example session:

      [root@hdss7-21 ~]# kubectl scale deployment nginx-dp --replicas=2 -n kube-public
      deployment.extensions/nginx-dp scaled
      [root@hdss7-21 ~]# kubectl get pod -n kube-public
      NAME                        READY   STATUS    RESTARTS   AGE
      nginx-dp-7d995b4bd5-qrkft   1/1     Running   0          50m
      nginx-dp-7d995b4bd5-th982   1/1     Running   0          68s
      # The front-end cluster IP is unchanged, but it now has one more backend IP
      [root@hdss7-21 ~]# ipvsadm -Ln
      IP Virtual Server version 1.2.1 (size=4096)
      Prot LocalAddress:Port Scheduler Flags
        -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
      TCP  192.168.0.1:443 nq
        -> 10.4.7.21:6443               Masq    1      0          0
        -> 10.4.7.22:6443               Masq    1      0          0
      TCP  192.168.28.186:80 nq
        -> 172.7.21.4:80                Masq    1      0          0
        -> 172.7.22.3:80                Masq    1      0          0
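To check the effect of scaling without reading the whole IPVS table by eye, the backends of one virtual service can be extracted with awk. This is a sketch only: backends_of is a hypothetical helper, and the sample text is the ipvsadm -Ln output recorded after the scale-out, so no root access or cluster is needed to run it.

```shell
#!/bin/sh
# backends_of VIP:PORT: read `ipvsadm -Ln` output on stdin and print the
# real servers listed under the given virtual service, one per line.
backends_of() {
  awk -v vs="$1" '
    # A line starting with TCP or UDP opens a new virtual service.
    $1 == "TCP" || $1 == "UDP" { current = $2; next }
    # Lines starting with "->" are real servers (skip the header line).
    $1 == "->" && $2 != "RemoteAddress:Port" && current == vs { print $2 }
  '
}

sample='IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.0.1:443 nq
  -> 10.4.7.21:6443               Masq    1      0          0
  -> 10.4.7.22:6443               Masq    1      0          0
TCP  192.168.28.186:80 nq
  -> 172.7.21.4:80                Masq    1      0          0
  -> 172.7.22.3:80                Masq    1      0          0'

printf '%s\n' "$sample" | backends_of 192.168.28.186:80
```

On a node, the same function could be fed with ipvsadm -Ln directly; the number of printed lines should match the replica count.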

- Get a resource manifest:

      # List the pods
      kubectl get pods -n kube-public
      # Print the manifest of one pod as YAML
      kubectl get pods nginx-dp-7d995b4bd5-qrkft -o yaml -n kube-public
      # Look up the documentation of a manifest field
      kubectl explain service.metadata

- Create a service from a manifest file. Create and edit the file nginx-ds-svc.yaml:

      apiVersion: v1
      kind: Service
      metadata:
        labels:
          app: nginx-ds
        name: nginx-ds
        namespace: default
      spec:
        ports:
        - port: 80
          protocol: TCP
          targetPort: 80
        selector:
          app: nginx-ds
        sessionAffinity: None
        type: ClusterIP

- Then create the service:

      [root@hdss7-21 ~]# kubectl create -f nginx-ds-svc.yaml
      service/nginx-ds created
      [root@hdss7-21 ~]# kubectl get svc -n default
      NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
      kubernetes   ClusterIP   192.168.0.1      <none>        443/TCP   54d
      nginx-ds     ClusterIP   192.168.176.30   <none>        80/TCP    7s

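- Modify and delete:

      # Edit the resource in place
      kubectl edit svc nginx-ds
      kubectl get svc
      # Imperative delete (by resource type and name)
      kubectl delete svc nginx-ds
      # Declarative delete (from the manifest file)
      kubectl delete -f nginx-ds-svc.yaml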

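Deploying Flanneld

Flannel provides connectivity between the hosts and containers of a cluster. It gives containers a virtual network by assigning each host its own subnet. It is based on Linux TUN/TAP, encapsulates IP packets in UDP or VXLAN to build an overlay network, and uses etcd (the Kubernetes API is also supported) to keep track of the subnet allocations.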

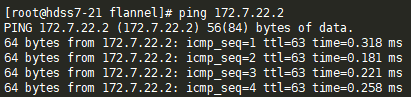

For example, try to ping the pod IP addresses:

    [root@hdss7-21 ~]# kubectl get pods -o wide
    NAME             READY   STATUS    RESTARTS   AGE   IP           NODE                NOMINATED NODE   READINESS GATES
    nginx-ds-bg22c   1/1     Running   0          20s   172.7.22.2   hdss7-22.host.com   <none>           <none>
    nginx-ds-w96gb   1/1     Running   0          20s   172.7.21.2   hdss7-21.host.com   <none>           <none>
    [root@hdss7-21 ~]# ping 172.7.21.2
    PING 172.7.21.2 (172.7.21.2) 56(84) bytes of data.
    64 bytes from 172.7.21.2: icmp_seq=1 ttl=64 time=0.080 ms
    64 bytes from 172.7.21.2: icmp_seq=2 ttl=64 time=0.042 ms
    ^C
    --- 172.7.21.2 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.042/0.061/0.080/0.019 ms
    [root@hdss7-21 ~]# ping 172.7.22.2
    PING 172.7.22.2 (172.7.22.2) 56(84) bytes of data.
    ^C
    --- 172.7.22.2 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1000ms

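As the output shows, the pod on the local host answers but the pod on the other host does not: containers on different hosts cannot reach each other. A CNI network plugin is needed to fix this. CNI plugins implement pod communication across hosts; besides Flannel, Calico is another common choice.

Installing Flannel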

- On hosts 21 and 22, change to the /opt/src directory and download the flannel release package. This walkthrough uses flannel v0.11.0:

      [root@hdss7-21 ~]# cd /opt/src/
      [root@hdss7-21 src]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

- Extract and install. Create the target directory first, because tar -C does not create it:

      [root@hdss7-22 src]# mkdir -p /opt/flannel-v0.11.0/
      [root@hdss7-22 src]# tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/
      [root@hdss7-22 src]# ln -s /opt/flannel-v0.11.0/ /opt/flannel
      [root@hdss7-22 src]# cd /opt/flannel
      [root@hdss7-22 flannel]# ll
      total 34436
      -rwxr-xr-x 1 root root 35249016 Jan 29  2019 flanneld
      -rwxr-xr-x 1 root root     2139 Oct 23  2018 mk-docker-opts.sh
      -rw-r--r-- 1 root root     4300 Oct 23  2018 README.md

- Change to the flannel directory and copy the certificates from host 200:

      [root@hdss7-21 src]# cd /opt/flannel
      [root@hdss7-21 flannel]# ls
      flanneld  mk-docker-opts.sh  README.md
      [root@hdss7-21 flannel]# mkdir cert
      [root@hdss7-21 flannel]# cd cert/
      [root@hdss7-21 cert]# scp hdss7-200:/opt/certs/ca.pem .
      [root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client.pem .
      [root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client-key.pem .

Configuring Flannel

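- On hosts 21 and 22, change to the flannel directory and create the subnet.env file. Adjust FLANNEL_SUBNET to the host (the values below are for host 21):

      FLANNEL_NETWORK=172.7.0.0/16
      FLANNEL_SUBNET=172.7.21.1/24
      FLANNEL_MTU=1500
      FLANNEL_IPMASQ=false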
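Since only the subnet line differs between hosts, the file can be generated rather than edited by hand. The sketch below assumes the addressing convention visible in this walkthrough (host 10.4.7.N uses FLANNEL_SUBNET=172.7.N.1/24); make_subnet_env is a hypothetical helper name.

```shell
#!/bin/sh
# make_subnet_env HOST_IP: print a subnet.env for one node.
# Assumption: the last octet of the host IP is reused as the third octet
# of the pod subnet, as in the examples of this walkthrough.
make_subnet_env() {
  last_octet=${1##*.}
  printf 'FLANNEL_NETWORK=172.7.0.0/16\n'
  printf 'FLANNEL_SUBNET=172.7.%s.1/24\n' "$last_octet"
  printf 'FLANNEL_MTU=1500\n'
  printf 'FLANNEL_IPMASQ=false\n'
}

# Values for host 22; redirect into /opt/flannel/subnet.env on that host.
make_subnet_env 10.4.7.22
```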

- On hosts 21 and 22, create and edit the flannel startup script and the supervisor program configuration file. Adjust the contents to the host:

  flanneld.sh

      #!/bin/bash
      ./flanneld \
        --public-ip=10.4.7.21 \
        --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
        --etcd-keyfile=./cert/client-key.pem \
        --etcd-certfile=./cert/client.pem \
        --etcd-cafile=./cert/ca.pem \
        --iface=ens33 \
        --subnet-file=./subnet.env \
        --healthz-port=2401

  flannel.ini

      [program:flanneld-7-21]
      command=/opt/flannel/flanneld.sh
      numprocs=1
      directory=/opt/flannel
      autostart=true
      autorestart=true
      startsecs=30
      startretries=3
      exitcodes=0,2
      stopsignal=QUIT
      stopwaitsecs=10
      user=root
      redirect_stderr=true
      stdout_logfile=/data/logs/flanneld/flanneld.stdout.log
      stdout_logfile_maxbytes=64MB
      stdout_logfile_backups=4
      stdout_capture_maxbytes=1MB
      stdout_events_enabled=false
      killasgroup=true
      stopasgroup=true

  Other setup:

      [root@hdss7-22 flannel]# chmod +x flanneld.sh
      [root@hdss7-22 flannel]# mkdir -p /data/logs/flanneld

- On either host 21 or host 22 (one of them is enough), write the network configuration to etcd:

      [root@hdss7-22 flannel]# cd /opt/etcd
      [root@hdss7-22 etcd]# ./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'
      [root@hdss7-22 etcd]# ./etcdctl get /coreos.com/network/config
      {"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

- On hosts 21 and 22, reload the supervisor configuration and enable IP forwarding:

      [root@hdss7-21 flannel]# supervisorctl update
      flanneld-7-21: added process group
      [root@hdss7-21 flannel]# supervisorctl status
      etcd-server-7-21                 RUNNING   pid 1040, uptime 28 days, 1:28:05
      flanneld-7-21                    RUNNING   pid 82394, uptime 0:07:59
      kube-apiserver-7-21              RUNNING   pid 1377, uptime 28 days, 1:27:14
      kube-controller-manager-7-21     RUNNING   pid 100659, uptime 11:49:45
      kube-kubelet-7-21                RUNNING   pid 108509, uptime 3 days, 2:18:02
      kube-proxy-7-21                  RUNNING   pid 74645, uptime 2 days, 2:22:07
      kube-scheduler-7-21              RUNNING   pid 100652, uptime 11:49:45
      # Enable forwarding; without it the pod IPs cannot be pinged
      [root@hdss7-21 flannel]# echo "1" > /proc/sys/net/ipv4/ip_forward
      [root@hdss7-21 flannel]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

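- Then, from each of the two nodes, ping the pod running on the other node. If both pings succeed, the configuration works.

- With the host-gw backend, flannel adds static routes on machines that sit behind the same gateway. Because the hosts can already reach each other by IP, each host forwards packets for a remote pod subnet to the host that owns it, which connects the pods.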
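To make the static-route idea concrete, the sketch below prints the route that corresponds to one peer node. It assumes the walkthrough's convention that host 10.4.7.N owns pod subnet 172.7.N.0/24; host_gw_route is a hypothetical helper, and the command is only printed, because flanneld installs the real route itself.

```shell
#!/bin/sh
# host_gw_route PEER_HOST_IP: print the static route for the pod subnet
# owned by one peer node (convention assumed: 10.4.7.N -> 172.7.N.0/24).
host_gw_route() {
  n=${1##*.}
  echo "ip route add 172.7.$n.0/24 via $1"
}

# Seen from hdss7-21, the peer is hdss7-22:
host_gw_route 10.4.7.22
```

On a running node, the routes that flanneld actually installed can be compared against this with ip route show.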

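Optimizing flannel's SNAT Rules

The SNAT rule optimization makes access between pods on the two hosts transparent. Without it, when a container accesses a container on the other host, the access log records the IP address of the host instead of the IP address of the pod.

Logged IP Before the Optimization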

- On host 21, pull the nginx:curl image, then edit nginx-ds.yaml so that the DaemonSet uses that image:

      apiVersion: extensions/v1beta1
      kind: DaemonSet
      metadata:
        name: nginx-ds
      spec:
        template:
          metadata:
            labels:
              app: nginx-ds
          spec:
            containers:
            - name: my-nginx
              image: harbor.od.com/public/nginx-curl
              ports:
              - containerPort: 80

- Apply the new YAML file, then delete the two pods so that they are recreated with the new image:

      [root@hdss7-21 ~]# kubectl apply -f nginx-ds.yaml
      daemonset.extensions/nginx-ds configured
      [root@hdss7-21 ~]# kubectl get pod
      NAME             READY   STATUS             RESTARTS   AGE
      nginx-ds-g2sft   0/1     Completed          11         32m
      nginx-ds-vgn8h   0/1     CrashLoopBackOff   11         32m
      [root@hdss7-21 ~]# kubectl delete pod nginx-ds-g2sft nginx-ds-vgn8h
      pod "nginx-ds-g2sft" deleted
      pod "nginx-ds-vgn8h" deleted
      [root@hdss7-21 ~]# kubectl get pod -o wide
      NAME             READY   STATUS    RESTARTS   AGE   IP           NODE                NOMINATED NODE   READINESS GATES
      nginx-ds-b777x   1/1     Running   0          12s   172.7.22.2   hdss7-22.host.com   <none>           <none>
      nginx-ds-l6tv5   1/1     Running   0          14s   172.7.21.2   hdss7-21.host.com   <none>           <none>

- On host 21, curl the pod on host 22 from inside the pod on host 21, then check the nginx log of the pod on host 22:

      # Host 21
      [root@hdss7-21 ~]# kubectl exec -it nginx-ds-l6tv5 bash
      root@nginx-ds-l6tv5:/# curl 172.7.22.2
      # Host 22
      [root@hdss7-22 ~]# kubectl get pods -o wide
      NAME             READY   STATUS    RESTARTS   AGE     IP           NODE                NOMINATED NODE   READINESS GATES
      nginx-ds-b777x   1/1     Running   0          5m22s   172.7.22.2   hdss7-22.host.com   <none>           <none>
      nginx-ds-l6tv5   1/1     Running   0          5m24s   172.7.21.2   hdss7-21.host.com   <none>           <none>
      [root@hdss7-22 ~]# kubectl logs -f nginx-ds-b777x
      10.4.7.21 - - [10/Feb/2022:17:11:29 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"

  The IP address recorded in the nginx log is the host's address (10.4.7.21), not the pod's.

iptables Configuration

-

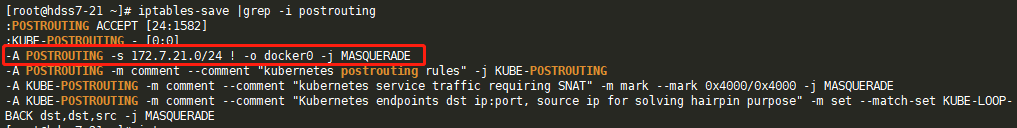

在21机器上,保存iptables规则,并查看postrouting规则:

[root@hdss7-21 ~]# iptables-save |grep -i postrouting :POSTROUTING ACCEPT [24:1582] :KUBE-POSTROUTING - [0:0] -A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE -A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

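iptables:

Syntax: iptables [-t table] option [chain] [match conditions] [-j target]

- -A: append a new rule to the end of the chain

- -s: match the source address (IP/MASK); a preceding "!" negates the match, meaning every address except this one

- -o: match packets that leave through this network interface

- MASQUERADE: dynamic masquerading; the source address is automatically rewritten to the current address of the outgoing interface

- The rule highlighted in red above can be read as follows: packets whose source is a docker IP in 172.7.21.0/24 and that do not leave through the docker0 bridge are SNATed. What we want instead is that packets whose destination is in 172.7.21.0/24 or in 172.7.0.0/16 (the overall docker network) are not source-NATed, because traffic inside the cluster should show its real address and needs no masquerading.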

- On hosts 21 and 22, install iptables-services and change the rule:

      [root@hdss7-21 ~]# yum install iptables-services -y
      [root@hdss7-21 ~]# systemctl start iptables
      [root@hdss7-21 ~]# systemctl enable iptables
      # Delete the old rule; adjust the subnet to the host
      [root@hdss7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
      # Add the new rule; adjust the subnet to the host. SNAT is applied only when the
      # destination is outside 172.7.0.0/16 and the packet does not leave through docker0
      [root@hdss7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
      # Check and save the rules
      [root@hdss7-21 ~]# iptables-save |grep -i postrouting
      :POSTROUTING ACCEPT [0:0]
      :KUBE-POSTROUTING - [0:0]
      -A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
      -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
      -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE
      -A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE
      [root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables
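Because the subnet in the two iptables commands differs per host, it is easy to mistype one of them. The sketch below prints the pair of commands for a given node so that they can be reviewed before being run as root. snat_rules is a hypothetical helper, and the 172.7.N.0/24 naming follows the convention used in this walkthrough.

```shell
#!/bin/sh
# snat_rules N: print the iptables commands for the node whose pod subnet
# is 172.7.N.0/24. Nothing is executed by this function.
snat_rules() {
  subnet="172.7.$1.0/24"
  # 1. Remove the rule that masquerades everything leaving the pod subnet.
  echo "iptables -t nat -D POSTROUTING -s $subnet ! -o docker0 -j MASQUERADE"
  # 2. Re-insert it with an exception for destinations in the pod network.
  echo "iptables -t nat -I POSTROUTING -s $subnet ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE"
}

# Commands for host 22:
snat_rules 22
```

After running the printed commands, the rules still need to be saved with iptables-save > /etc/sysconfig/iptables, as in the session above.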

- Curl the other host's pod again from hosts 21 and 22. If curl reports "curl: (7) Failed to connect to 172.7.22.2 port 80: No route to host", delete the REJECT rules from the filter table:

      # Show the REJECT rules in the filter table
      [root@hdss7-21 ~]# iptables-save |grep -i reject
      -A INPUT -j REJECT --reject-with icmp-host-prohibited
      -A FORWARD -j REJECT --reject-with icmp-host-prohibited
      # Delete the rules
      [root@hdss7-21 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited
      [root@hdss7-21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited
      # Save the rules (on both hosts)
      [root@hdss7-22 ~]# iptables-save > /etc/sysconfig/iptables
      [root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables

  Then repeat the test:

      [root@hdss7-21 ~]# kubectl exec -it nginx-ds-l6tv5 bash
      root@nginx-ds-l6tv5:/# curl 172.7.22.2
      <!DOCTYPE html>
      # The logged IP is now the IP of the pod
      [root@hdss7-22 ~]# kubectl logs nginx-ds-b777x -f
      10.4.7.21 - - [10/Feb/2022:17:11:29 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
      172.7.21.2 - - [11/Feb/2022:07:57:41 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"
      172.7.21.2 - - [11/Feb/2022:07:58:20 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.38.0" "-"

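K8S Service Add-ons

Deploying CoreDNS (Service Discovery)

Service discovery is the process by which services (applications) locate one another. It matters where services are dynamic, are updated and released frequently, and scale automatically. CoreDNS provides service discovery in this cluster.

In K8S, a client resolves the domain name of a service, and the request is then load-balanced across the pods behind that service. Pod IPs change all the time, so K8S abstracts a service resource that groups a set of pods through a label selector, and an abstract cluster network that gives the service a fixed cluster IP. This keeps the access point of the service stable.

The earlier deployment used bind9 for traditional DNS resolution. Inside K8S, a name such as nginx-ds can be resolved to its cluster IP in the same way.

In the deployment below, services are delivered as containers.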

- On host 200, change to the nginx virtual host configuration directory and create k8s-yaml.od.com.conf with the following content:

      server {
          listen       80;
          server_name  k8s-yaml.od.com;

          location / {
              autoindex on;
              default_type text/plain;
              root /data/k8s-yaml;
          }
      }

  Then create the directories and reload nginx:

      [root@hdss7-200 conf.d]# mkdir /data/k8s-yaml
      [root@hdss7-200 conf.d]# nginx -t
      nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
      nginx: configuration file /etc/nginx/nginx.conf test is successful
      [root@hdss7-200 conf.d]# systemctl reload nginx
      [root@hdss7-200 conf.d]# cd /data/k8s-yaml/
      [root@hdss7-200 k8s-yaml]# mkdir coredns

- On host 11, configure name resolution. Edit /var/named/od.com.zone, increment the serial by one, and append the following record at the end:

      # Add an A record
      k8s-yaml           A    10.4.7.200

  The complete zone file then reads:

      $ORIGIN od.com.
      $TTL 600    ; 10 minutes
      @           IN SOA  dns.od.com. dnsadmin.od.com. (
                      2021121003 ; serial
                      10800      ; refresh (3 hours)
                      900        ; retry (15 minutes)
                      604800     ; expire (1 week)
                      86400      ; minimum (1 day)
                      )
                      NS   dns.od.com.
      $TTL 60 ; 1 minute
      dns                A    10.4.7.11
      harbor             A    10.4.7.200
      k8s-yaml           A    10.4.7.200

  Restart named and verify the record:

      [root@hdss7-11 named]# systemctl restart named
      [root@hdss7-11 named]# dig -t A k8s-yaml.od.com @10.4.7.11 +short
      10.4.7.200
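Forgetting to increment the serial is a common reason for a zone change not taking effect, so the step can be scripted. The sketch below is one possible way to do it with awk; bump_serial is a hypothetical helper, it relies on the "; serial" comment present in this zone file, and it prints the result instead of editing the file in place.

```shell
#!/bin/sh
# bump_serial FILE: print FILE with the number on the first "; serial"
# line increased by one. The input file itself is left untouched.
bump_serial() {
  awk '/; serial/ && !bumped { sub($1, $1 + 1); bumped = 1 } { print }' "$1"
}

# Demonstration on a temporary copy of the SOA fragment shown above.
zone=$(mktemp)
printf '%s\n' \
  '@ IN SOA dns.od.com. dnsadmin.od.com. (' \
  '        2021121003 ; serial' \
  '        10800      ; refresh (3 hours)' > "$zone"
bump_serial "$zone"
rm -f "$zone"
```

For the real file, the output could be written to a temporary file, checked with named-checkzone od.com on that file, and then moved into place before restarting named.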

- On host 200, pull the coredns image, retag it, and push it to the private registry:

      [root@hdss7-200 ~]# cd /data/k8s-yaml/
      [root@hdss7-200 k8s-yaml]# docker pull coredns/coredns:1.6.1
      [root@hdss7-200 k8s-yaml]# docker images |grep coredns
      [root@hdss7-200 k8s-yaml]# docker tag c0f6e815079e harbor.od.com/public/coredns:v1.6.1
      [root@hdss7-200 k8s-yaml]# docker push harbor.od.com/public/coredns:v1.6.1

- On host 200, change to the /data/k8s-yaml/coredns directory and create the resource manifest files.

  rbac.yaml, the cluster RBAC permissions:

      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: coredns
        namespace: kube-system
        labels:
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
      ---
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRole
      metadata:
        labels:
          kubernetes.io/bootstrapping: rbac-defaults
          addonmanager.kubernetes.io/mode: Reconcile
        name: system:coredns
      rules:
      - apiGroups:
        - ""
        resources:
        - endpoints
        - services
        - pods
        - namespaces
        verbs:
        - list
        - watch
      ---
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRoleBinding
      metadata:
        annotations:
          rbac.authorization.k8s.io/autoupdate: "true"
        labels:
          kubernetes.io/bootstrapping: rbac-defaults
          addonmanager.kubernetes.io/mode: EnsureExists
        name: system:coredns
      roleRef:
        apiGroup: rbac.authorization.k8s.io
        kind: ClusterRole
        name: system:coredns
      subjects:
      - kind: ServiceAccount
        name: coredns
        namespace: kube-system

  cm.yaml, the ConfigMap manifest:

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: coredns
        namespace: kube-system
      data:
        Corefile: |
          .:53 {
              errors
              log
              health
              ready
              kubernetes cluster.local 192.168.0.0/16
              forward . 10.4.7.11
              cache 30
              loop
              reload
              loadbalance
          }

  dp.yaml, the Deployment controller manifest:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coredns
        namespace: kube-system
        labels:
          k8s-app: coredns
          kubernetes.io/name: "CoreDNS"
      spec:
        replicas: 1
        selector:
          matchLabels:
            k8s-app: coredns
        template:
          metadata:
            labels:
              k8s-app: coredns
          spec:
            priorityClassName: system-cluster-critical
            serviceAccountName: coredns
            containers:
            - name: coredns
              image: harbor.od.com/public/coredns:v1.6.1
              args:
              - -conf
              - /etc/coredns/Corefile
              volumeMounts:
              - name: config-volume
                mountPath: /etc/coredns
              ports:
              - containerPort: 53
                name: dns
                protocol: UDP
              - containerPort: 53
                name: dns-tcp
                protocol: TCP
              - containerPort: 9153
                name: metrics
                protocol: TCP
              livenessProbe:
                httpGet:
                  path: /health
                  port: 8080
                  scheme: HTTP
                initialDelaySeconds: 60
                timeoutSeconds: 5
                successThreshold: 1
                failureThreshold: 5
            dnsPolicy: Default
            volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile

  svc.yaml, the Service manifest:

      apiVersion: v1
      kind: Service
      metadata:
        name: coredns
        namespace: kube-system
        labels:
          k8s-app: coredns
          kubernetes.io/cluster-service: "true"
          kubernetes.io/name: "CoreDNS"
      spec:
        selector:
          k8s-app: coredns
        clusterIP: 192.168.0.2
        ports:
        - name: dns
          port: 53
          protocol: UDP
        - name: dns-tcp
          port: 53
        - name: metrics
          port: 9153
          protocol: TCP

  The cluster IP is set to 192.168.0.2 because the kubelet configuration already uses 192.168.0.2 as the cluster-dns address.

- On any node, apply the resource manifests with kubectl apply (the declarative way). Here the commands are run on host 21:

      [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml
      [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/cm.yaml
      [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/dp.yaml
      [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml

  Check the result:

      [root@hdss7-21 ~]# kubectl get all -n kube-system
      NAME                           READY   STATUS    RESTARTS   AGE
      pod/coredns-6b6c4f9648-cmtnr   1/1     Running   0          11m

      NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                  AGE
      service/coredns   ClusterIP   192.168.0.2   <none>        53/UDP,53/TCP,9153/TCP   11m

      NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
      deployment.apps/coredns   1/1     1            1           11m

      NAME                                 DESIRED   CURRENT   READY   AGE
      replicaset.apps/coredns-6b6c4f9648   1         1         1       11m

- Test. On host 21, run the following commands to check that CoreDNS works:

      [root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.21 -n kube-public
      [root@hdss7-21 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public
      [root@hdss7-21 ~]# kubectl get svc -n kube-public
      NAME       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
      nginx-dp   ClusterIP   192.168.28.186   <none>        80/TCP    4d20h
      [root@hdss7-21 ~]# dig -t A nginx-dp.kube-public.svc.cluster.local. @192.168.0.2 +short
      192.168.28.186
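The name queried with dig follows a fixed pattern: service name, namespace, the literal svc, then the cluster domain configured in the Corefile (cluster.local here). A small sketch that builds the name is shown below; svc_fqdn is a hypothetical helper.

```shell
#!/bin/sh
# svc_fqdn SERVICE NAMESPACE [CLUSTER_DOMAIN]
# Print the fully qualified DNS name of a service, with trailing dot.
# The cluster domain defaults to cluster.local, as set in the Corefile.
svc_fqdn() {
  echo "$1.$2.svc.${3:-cluster.local}."
}

svc_fqdn nginx-dp kube-public

# On a node the result can be resolved against CoreDNS, for example:
#   dig -t A "$(svc_fqdn nginx-dp kube-public)" @192.168.0.2 +short
```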

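Deploying Ingress (Service Exposure)

Overview

CoreDNS, deployed above, maps service names to service IPs inside the K8S cluster, so pods can be reached through the service name.

To reach resources inside the cluster from outside the cluster, the services have to be exposed.

K8S offers two common ways to expose a service:

- Use a service of type NodePort

  A NodePort service works like a port mapping: a port on the hosts is mapped to the service, which forwards the traffic to the port inside the container.

- Use an Ingress resource

  Ingress is one of the standard resources of the K8S API and a core resource. It is a set of rules based on domain names and URL paths that forward user requests to the specified service resources. It forwards request traffic from outside the cluster into the cluster, which is what "service exposure" means.

We use Traefik as the ingress controller. Traefik is a modern HTTP reverse proxy and load balancer created to make deploying microservices easier. It watches the management APIs of service discovery and infrastructure components, notices when microservices are added, removed, killed, or updated, and generates its configuration automatically.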
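To illustrate what "rules based on domain names and URL paths" look like, the sketch below prints an Ingress manifest for an arbitrary service. make_ingress is a hypothetical helper and nginx-dp.od.com is a hypothetical host name; the manifest uses the extensions/v1beta1 API version that appears elsewhere in this walkthrough, whereas newer clusters serve Ingress from networking.k8s.io/v1 with a different backend syntax.

```shell
#!/bin/sh
# make_ingress NAME NAMESPACE HOST SERVICE PORT
# Print an Ingress manifest that routes HOST/ to SERVICE:PORT.
make_ingress() {
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: $1
  namespace: $2
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: $3
    http:
      paths:
      - path: /
        backend:
          serviceName: $4
          servicePort: $5
EOF
}

# Hypothetical host name; nginx-dp is the service created earlier.
make_ingress nginx-dp kube-public nginx-dp.od.com nginx-dp 80
```

The printed manifest could be saved to a file and applied with kubectl apply -f once an ingress controller is running; the host name would also need a DNS record.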

Deployment Steps

- On host 200, pull the traefik (ingress controller) image, retag it, and push it to the private registry:

      [root@hdss7-200 ~]# cd /data/k8s-yaml/
      [root@hdss7-200 k8s-yaml]# mkdir traefik
      [root@hdss7-200 k8s-yaml]# docker pull traefik:v1.7.2-alpine
      [root@hdss7-200 k8s-yaml]# docker images |grep traefik
      [root@hdss7-200 k8s-yaml]# docker tag add5fac61ae5 harbor.od.com/public/traefik:v1.7.2
      [root@hdss7-200 k8s-yaml]# docker push harbor.od.com/public/traefik:v1.7.2

- On host 200, change to the /data/k8s-yaml/traefik/ directory and prepare the resource manifests for traefik (4 YAML files).

  rbac.yaml

      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: traefik-ingress-controller
        namespace: kube-system
      ---
      apiVersion: rbac.authorization.k8s.io/v1beta1
      kind: ClusterRole
      metadata:
        name: traefik-ingress-controller
      rules:
        - apiGroups:
            - ""
          resources:
            - services
            - endpoints
            - secrets
          verbs:
            - get
            - list
            - watch
        - apiGroups:
            - extensions
          resources:
            - ingresses
          verbs:
            - get
            - list
            - watch
      ---
      kind: ClusterRoleBinding
      apiVersion: rbac.authorization.k8s.io/v1beta1
      metadata:
        name: traefik-ingress-controller
      roleRef:
        apiGroup: rbac.authorization.k8s.io
        kind: ClusterRole
        name: traefik-ingress-controller
      subjects:
      - kind: ServiceAccount
        name: traefik-ingress-controller
        namespace: kube-system

  ds.yaml

      apiVersion: extensions/v1beta1
      kind: DaemonSet
      metadata:
        name: traefik-ingress
        namespace: kube-system
        labels:
          k8s-app: traefik-ingress
      spec:
        template:
          metadata:
            labels:
              k8s-app: traefik-ingress
              name: traefik-ingress
          spec:
            serviceAccountName: traefik-ingress-controller
            terminationGracePeriodSeconds: 60
            containers:
            - image: harbor.od.com/public/traefik:v1.7.2
              name: traefik-ingress
              ports:
              - name: controller
                containerPort: 80
                hostPort: 81
              - name: admin-web
                containerPort: 8080
              securityContext:
                capabilities:
                  drop:
                  - ALL
                  add:
                  - NET_BIND_SERVICE
              args:
              - --api
              - --kubernetes
              - --logLevel=INFO
              - --insecureskipverify=true
              - --kubernetes.endpoint=https://10.4.7.10:7443
              - --accesslog
              - --accesslog.filepath=/var/log/traefik_access.log
              - --traefiklog
              - --traefiklog.filepath=/var/log/traefik.log
              - --metrics.prometheus

  svc.yaml

      kind: Service
      apiVersion: v1
      metadata:
        name: traefik-ingress-service
        namespace: kube-system
      spec:
        selector:
          k8s-app: traefik-ingress
        ports:
          - protocol: TCP
            port: 80
            name: controller
          - protocol: TCP
            port: 8080
            name: admin-web

  ingress.yaml

      apiVersion: extensions/v1beta1
      kind: Ingress
      metadata:
        name: traefik-web-ui
        namespace: kube-system
        annotations:
          kubernetes.io/ingress.class: traefik
      spec:
        rules:
        - host: traefik.od.com
          http:
            paths:
            - path: /
              backend:
                serviceName: traefik-ingress-service
                servicePort: 8080

- On host 21 or host 22, apply the resource manifests:

      [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/rbac.yaml
      [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml
      [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/svc.yaml
      [root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ingress.yaml

  Restart the docker service on the nodes, then check the pod status:

      # Run on both host 21 and host 22
      [root@hdss7-22 ~]# systemctl restart docker
      [root@hdss7-22 ~]# kubectl get pods -n kube-system -o wide
      NAME                       READY   STATUS    RESTARTS   AGE   IP           NODE                NOMINATED NODE   READINESS GATES
      coredns-6b6c4f9648-cmtnr   1/1     Running   0          24h   172.7.21.3   hdss7-21.host.com   <none>           <none>
      traefik-ingress-48v54      1/1     Running   0          74s   172.7.22.4   hdss7-22.host.com   <none>           <none>
      traefik-ingress-sxnkk      1/1     Running   0          74s   172.7.21.4   hdss7-21.host.com   <none>           <none>
      [root@hdss7-22 ~]# netstat -tlunp|grep 81
      tcp        0      0 0.0.0.0:81      0.0.0.0:*       LISTEN      42994/docker-proxy
      tcp6       0      0 :::81           :::*            LISTEN      43000/docker-proxy

- On hosts 11 and 12, configure the reverse proxy. Create and edit the file /etc/nginx/conf.d/od.com.conf with the following configuration:

      upstream default_backend_traefik {
          server 10.4.7.21:81    max_fails=3 fail_timeout=10s;
          server 10.4.7.22:81    max_fails=3 fail_timeout=10s;
      }
      server {
          server_name *.od.com;

          location / {
              proxy_pass http://default_backend_traefik;
              proxy_set_header Host       $http_host;
              proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
          }
      }

  Test the configuration and reload nginx:

      [root@hdss7-11 conf.d]# nginx -t
      nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
      nginx: configuration file /etc/nginx/nginx.conf test is successful
      [root@hdss7-11 conf.d]# systemctl reload nginx
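The upstream block lists every node that runs a traefik pod on host port 81, so it has to change whenever nodes are added. A sketch that generates the block from a list of node IPs follows; make_upstream is a hypothetical helper, and the upstream name and server parameters are copied from the configuration above.

```shell
#!/bin/sh
# make_upstream PORT NODE_IP...: print an nginx upstream block with one
# server line per node, using the same parameters as the config above.
make_upstream() {
  port=$1
  shift
  echo "upstream default_backend_traefik {"
  for ip in "$@"; do
    echo "    server $ip:$port    max_fails=3 fail_timeout=10s;"
  done
  echo "}"
}

make_upstream 81 10.4.7.21 10.4.7.22
```

After regenerating the block, nginx -t should still be run before reloading nginx.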

- On host 11, configure name resolution. Edit /var/named/od.com.zone, increment the serial, add an A record for traefik, and then restart the named service:

      $ORIGIN od.com.
      $TTL 600    ; 10 minutes
      @           IN SOA  dns.od.com. dnsadmin.od.com. (
                      2021121004 ; serial
                      10800      ; refresh (3 hours)
                      900        ; retry (15 minutes)
                      604800     ; expire (1 week)
                      86400      ; minimum (1 day)
                      )
                      NS   dns.od.com.
      $TTL 60 ; 1 minute
      dns                A    10.4.7.11
      harbor             A    10.4.7.200
      k8s-yaml           A    10.4.7.200
      traefik            A    10.4.7.10

- Finally, open traefik.od.com in a browser to reach the Traefik web UI.